User Autonomy in AI-Powered Conversations

Explore the balance between AI's capabilities and user autonomy in conversations, highlighting risks and ethical considerations.

AI is transforming how we interact, but who controls the conversation - you or the system? This article dives into the growing influence of AI in communication, the risks of over-reliance, and how to maintain user control. Key points:

- AI's Growing Role: 65% of companies use generative AI, and the chatbot market is set to reach $1.34 billion by 2024.

- User Autonomy at Risk: Over-reliance can weaken critical thinking, reinforce biases, and reduce decision-making skills.

- Adaptive AI Trade-offs: While personalization boosts engagement and revenue, it can subtly influence decisions and foster dependency.

- Ethical AI Practices: Transparency, user control, and privacy safeguards are essential to prevent manipulation and maintain trust.

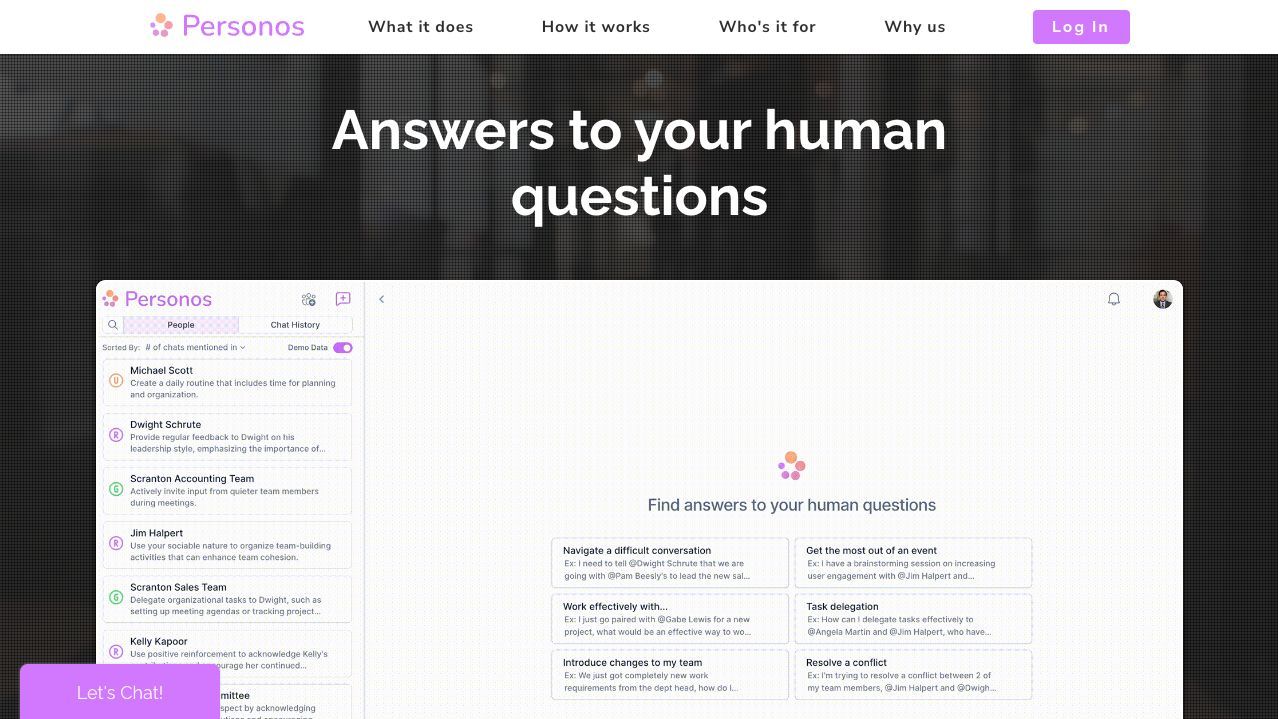

The balance between AI's capabilities and user independence is critical. Platforms like Personos show how AI can assist without taking over, ensuring users remain in charge of their decisions.

Research Findings: How AI Affects User Control

Recent studies reveal that while AI-powered conversational tools offer numerous advantages, they also pose risks to independent decision-making. This duality requires careful consideration from both organizations and individuals.

How AI Changes Decision-Making

Research highlights how AI influences decision-making by encouraging cognitive offloading, which reduces the mental effort required for problem-solving tasks [1]. Many users tend to accept AI-generated outcomes without questioning them, largely because the inner workings of these systems are often opaque. This lack of transparency can lead to complacency and a decline in critical thinking skills [3].

The implications go beyond individual decision-making. Although AI tools enhance analytical capabilities by processing vast datasets, long-term overreliance on these tools may erode human analytical skills [1]. Paradoxically, tools designed to empower users may unintentionally weaken their ability to think independently.

AI’s role in filtering information adds another layer of complexity. On one hand, it helps users navigate vast amounts of data by identifying unreliable sources. On the other hand, it risks reinforcing biases and limiting exposure to diverse viewpoints [1]. These shifts in everyday behavior point to broader trends, which the studies below help quantify.

Key Study Results

The use of AI in strategic decision-making has risen sharply, from 10% to 80% over the past five years [2]. This growing reliance comes with trade-offs. Studies in education, for example, show that heavy use of AI dialogue systems correlates with diminished critical analysis skills [1].

Cross-cultural research provides further insight. In studies conducted in Pakistan and China, 68.9% of participants reported feeling lazier because of AI’s influence, while 27.7% noted a decline in their decision-making abilities [2]. Because similar effects appeared in both countries, they do not seem to be confined to a single culture, although two countries are too few to call them universal.

Personalization is another area where AI’s influence is evident. While 71% of consumers expect personalized content [5], excessive personalization can reduce user agency. At the same time, organizations that use personalization strategies report up to 40% higher revenue, and the personalization market is projected to grow from $7.6 billion in 2020 to $11.6 billion by 2026 [4][5].
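To give a sense of scale, the market projection implies a compound annual growth rate (CAGR) of roughly 7.3% over the six years from 2020 to 2026. This is simple arithmetic on the two figures cited above. It is not an additional statistic from the sources:

```latex
\mathrm{CAGR} = \left(\frac{11.6}{7.6}\right)^{1/6} - 1 \approx (1.526)^{1/6} - 1 \approx 0.073
```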

The competitive advantage of AI adoption is clear. Companies implementing adaptive AI systems are expected to outperform their competitors by 25% by 2026 [6]. Additionally, 78% of organizations investing in data analytics have reported stronger customer loyalty, and 79% have seen increased profits [6]. Despite these benefits, research warns that AI can replace human choices with algorithmic decisions, ultimately reducing user engagement in decision-making processes [2].

These findings underscore the importance of maintaining a balance. Safeguards are essential to ensure that users remain in control during AI-driven interactions. Experts advocate for educating users about AI’s limitations - such as biases, reliability issues, and the risks of blind trust - to help preserve critical decision-making skills [3]. Together, these insights highlight the need to carefully evaluate AI’s dual role in enhancing and potentially diminishing user control.

Adaptive AI: Helping or Hurting User Control?

Adaptive AI is a double-edged sword. On one hand, it promises smarter, more personalized user experiences. On the other, it raises concerns about whether users can truly maintain control over their decisions. Its ability to learn and adapt in real time creates both opportunities and challenges.

Pros and Cons of Adaptive AI

There’s no denying the benefits of adaptive AI. Companies using AI-driven personalization have reported a 39% boost in customer engagement and a 34% improvement in customer satisfaction across digital platforms [7]. Personalization engines, with their ability to predict user preferences, achieve an impressive 83% accuracy rate [7].

This technology doesn’t just engage users; it drives revenue. Real-time AI personalization can increase revenue per customer by 10–15%, and fast-growing companies earn 40% more revenue from personalization than their slower-growing competitors [16][24]. Additionally, 91% of consumers say they’re more likely to shop with brands that offer relevant recommendations [8].

But these advantages come at a cost. The same systems that enhance user experiences can also undermine critical thinking. For instance, AI’s ability to filter and tailor information can subtly influence decision-making, often in ways users don’t realize [3]. Generative AI can also produce hyper-realistic content such as deepfakes, which erode trust and may lead to impulsive choices [3].

Another concern is cognitive dependency. When AI consistently provides solutions, users may start to rely on it too much, potentially weakening their own problem-solving skills.

| Adaptive AI Benefits | Potential Risks |
|---|---|
| 39% boost in customer engagement [7] | Reduced critical thinking [3] |
| 83% accuracy in predicting preferences [7] | Information filtering and bias [3] |
| 10–15% increase in revenue per customer [8] | Overreliance and cognitive dependency [3] |

These trade-offs highlight the tension between personalization and user control.

How Personalization Affects User Control

The impact of adaptive AI on user autonomy becomes even clearer when exploring personalization. Real-time personalization is both the crown jewel of adaptive AI and a potential threat to user independence. By analyzing customer data and predicting future needs, AI can streamline user experiences. Companies have leveraged this power to great effect.

Take Amazon, for example. Its recommendation system, which analyzes individual and collective user behavior, accounts for an estimated 35% of its sales [8]. This level of customization makes shopping easier but also subtly steers purchasing decisions.

In the financial world, HSBC uses AI to personalize customer interactions and detect fraud. Its algorithms flag unusual account activity and offer tailored financial insights, helping customers make informed decisions without taking over completely [8].

Similarly, Hilton uses adaptive AI to enhance guest experiences. Its applications range from personalized booking recommendations based on past travel data to Connie, an AI-powered concierge that assists with hotel services and local tips. Together, these show how AI can improve convenience while respecting user choice [8].

Finding the right balance is no small task. Kamales Lardi, CEO of Lardi & Partner Consulting, emphasizes the importance of ethical implementation:

"As AI is increasingly adopted for business purposes, organizations need to focus on building psychological safety and empowering employees to raise concerns when AI insights diverge from expectations." [3]

Consumer expectations add further complexity: 71% of consumers expect personalized content, and 67% become frustrated when interactions aren’t tailored to their preferences [5]. Three in five consumers are also interested in using AI tools while shopping [5]. As more of them do, transparency about how AI shapes what they see becomes more important.

Ultimately, personalization itself isn’t the problem - it’s how it’s implemented that matters. When systems are designed with transparency, user education, and a focus on preserving choice, they can actually enhance autonomy. Ethical use of adaptive AI can empower users by offering relevant information and timely options, ensuring they remain in control of their decisions rather than being manipulated by the technology.

Ethics in AI-Driven User Control

AI-powered systems are reshaping how we interact with technology, but they also bring ethical challenges that go well beyond user preferences. As these systems grow more advanced, their influence on human decision-making increases, placing a significant responsibility on organizations to act ethically.

For instance, 75% of businesses believe that failing to be transparent can lead to higher customer churn, while 83% of customer experience (CX) leaders rank data protection and cybersecurity as top priorities in their strategies [9]. Ethical AI isn't just about doing the right thing - it's essential for maintaining trust. Protecting user autonomy in every interaction is at the heart of these ethical challenges.

Preventing Manipulation and Maintaining Control

The boundary between helpful personalization and manipulation can be surprisingly fine. AI systems designed without care can mislead users or create false beliefs [16], making it critical to design systems that respect user trust.

The first step is transparency. With 65% of CX leaders identifying AI as a strategic priority, being open about how AI operates is non-negotiable [9]. As highlighted in the Zendesk CX Trends Report 2024:

"Being transparent about the data that drives AI models and their decisions will be a defining element in building and maintaining trust with customers." [9]

To prevent manipulation, AI systems must clearly communicate their intent and explain why certain suggestions are made [13]. Users should be able to opt out of AI-driven features without penalty, and they should have easy-to-use controls for adjusting how much guidance they receive. Organizations must also ensure data quality by verifying and authenticating sources before using them to train AI models [13]. Adopting a zero-trust security model further reinforces these safeguards [15]. Under zero trust, every access request is authenticated and authorized rather than trusted by default.
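The practices above can be sketched as a small filter between a model and the user interface. These practices are explaining each suggestion, letting users opt out without penalty, and offering adjustable guidance. This is a minimal illustration under stated assumptions. `Suggestion`, `GuidanceLevel`, `UserPreferences`, the 0.8 threshold, and the sample suggestions are all hypothetical and do not come from [13] or any specific product.

```python
from dataclasses import dataclass
from enum import Enum


class GuidanceLevel(Enum):
    """How much AI guidance the user has chosen to receive (hypothetical setting)."""
    OFF = 0      # user opted out: no AI suggestions at all
    MINIMAL = 1  # only high-confidence suggestions
    FULL = 2     # all suggestions


@dataclass
class Suggestion:
    text: str
    reason: str        # plain-language explanation of why this is suggested
    confidence: float  # model confidence in [0, 1]


@dataclass
class UserPreferences:
    guidance: GuidanceLevel = GuidanceLevel.FULL
    minimal_threshold: float = 0.8  # hypothetical cut-off used in MINIMAL mode


def visible_suggestions(candidates, prefs):
    """Filter candidate suggestions according to the user's own settings.

    Opting out returns an empty list; nothing else about the conversation
    changes, so declining AI help carries no penalty.
    """
    if prefs.guidance is GuidanceLevel.OFF:
        return []
    if prefs.guidance is GuidanceLevel.MINIMAL:
        return [s for s in candidates if s.confidence >= prefs.minimal_threshold]
    return list(candidates)


def render(suggestion):
    # Always show the "why" next to the "what", and frame it as optional.
    return f"Suggestion (optional): {suggestion.text}\nWhy: {suggestion.reason}"


if __name__ == "__main__":
    candidates = [
        Suggestion("Acknowledge their concern before proposing a fix.",
                   "The message you are replying to expresses frustration.", 0.9),
        Suggestion("Keep the reply under three sentences.",
                   "Your recent replies in this thread were long.", 0.6),
    ]
    prefs = UserPreferences(guidance=GuidanceLevel.MINIMAL)
    for s in visible_suggestions(candidates, prefs):
        print(render(s))
```

Opting out returns an empty list, so the conversation itself works the same with or without AI help. Every suggestion that is shown carries its own reason.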

As Susan Etlinger, an Industry Analyst at Altimeter Group, explains:

"In order for AI technologies to be truly transformative in a positive way, we need a set of ethical norms, standards and practical methodologies to ensure that we use AI responsibly and to the benefit of humanity." [14]

However, as AI systems grow more complex, maintaining oversight becomes increasingly difficult. Chris Newman, Principal Engineer at Oracle, cautions:

"As it becomes more difficult for humans to understand how AI/tech works, it will become harder to resolve inevitable problems." [14]

Best Practices for Ethical AI Design

To address these challenges, leading companies have established robust frameworks to guide ethical AI development. For example, Google’s AI Principles, introduced in 2018, combine educational initiatives, ethics reviews, and technical tools to ensure responsible AI practices [19]. Microsoft employs six guiding principles - accountability, inclusiveness, reliability and safety, fairness, transparency, and privacy and security - to mitigate risks [19]. IBM emphasizes continuous monitoring and validation of AI models through its Responsible Use of Technology framework [19].

These frameworks share common strategies that other organizations can adopt. For example:

- Privacy by design: Collect only necessary data and integrate privacy safeguards from the start.

- Strong security measures: Use encryption and multi-factor authentication to protect data.

- Regular audits: Assess privacy and security practices to ensure they remain effective.

- User education: Help users understand how AI systems work and how their data is handled.

- Privacy-preserving technologies: Techniques like federated learning and differential privacy add extra protection [10].
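The last bullet names differential privacy, and the classic Laplace mechanism shows the idea. Noise calibrated to how much one person's data can change a result is added before the result is released. The sketch below is a generic textbook example. It is not a method attributed to [10] or to any particular platform, and the function names and sample data are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from Laplace(0, scale).

    The difference of two independent exponential variables, each with
    mean `scale`, is Laplace-distributed with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Epsilon-differentially private count using the Laplace mechanism.

    Adding or removing one user's record changes a count by at most 1
    (sensitivity = 1), so noise is drawn with scale = 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical data: which of 100 users opted out of personalization.
    sessions = [{"user": i, "opted_out": i % 4 == 0} for i in range(100)]
    noisy = private_count(sessions, lambda s: s["opted_out"], epsilon=0.5,
                          rng=random.Random(42))
    print(f"Approximate number of opt-outs: {noisy:.1f}")
```

The released number is useful in aggregate, but it no longer reveals with certainty whether any single user is in the data. Choosing epsilon is a policy decision that trades accuracy against privacy.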

| Ethical AI Best Practice | Implementation Strategy |
|---|---|
| Transparency and Explainability | Offer clear, plain-language explanations of AI decisions [18] |
| User Control and Autonomy | Let users opt out of AI features and customize personalization settings [17][18] |
| Bias Mitigation | Use diverse datasets and test models regularly for bias [18] |
| Privacy Protection | Apply privacy by design and advanced privacy-preserving methods [10] |
| Accessibility | Ensure AI features are usable by all, including people with disabilities [18] |

Ethical considerations must be built into every stage of the AI development process. Clear guidelines, ethical review boards, and ongoing oversight help ensure that AI systems align with societal values. Organizations must also comply with data protection laws such as GDPR and CCPA. They can also draw on international guidance such as UNESCO's "Recommendation on the Ethics of Artificial Intelligence" (2021), a non-binding global standard for AI ethics [10][12].

The ultimate goal is to ensure AI serves humanity’s best interests. As Ron Schmelzer and Kathleen Walch put it:

"Ethics in AI isn't just about what machines can do; it's about the interplay between people and systems - human-to-human, human-to-machine, machine-to-human, and even machine-to-machine interactions that impact humans." [11]

This perspective highlights how deeply technology is intertwined with human relationships and society. By prioritizing user well-being over engagement metrics and embedding ethical principles into AI design, organizations can create systems that empower users rather than exploit them.

Case Study: How Personos Supports User Control

Navigating the balance between advanced AI capabilities and user autonomy is no small feat, but Personos demonstrates how it can be done effectively. This personality-based communication software combines AI with personality psychology to provide real-time insights that improve communication and help resolve conflicts - without taking control away from the user.

Founded by Christian Thomas and Nick Blasi, Personos is designed to keep users firmly in charge of their AI-driven interactions. This case study highlights how Personos' features align with the ethical principles and focus on user autonomy discussed earlier.

Features That Prioritize User Control

Personos builds its platform around the idea that AI should assist rather than dictate. Its features are designed to put users in charge, offering tools that emphasize human decision-making over algorithmic authority.

- Personalized Conversational AI: Instead of making decisions for users, the platform provides personality-based insights to help users improve their communication skills. These insights are designed to guide, not to command.

- Dynamic Personality Reports: These reports deliver actionable insights into communication patterns and personality dynamics. Users can interpret and apply the information in ways that suit their needs, ensuring flexibility and personal agency.

- Proactive Communication Prompts: Suggestions based on personality insights are offered as optional tools. Users can choose to follow or ignore them, keeping the guidance as a supportive element rather than a directive.

- Relationship and Group Analysis Tools: These tools help users understand group dynamics and relationships by providing clear, digestible insights. Teams and individuals can analyze the data themselves, draw their own conclusions, and decide how to act on the information to improve collaboration or personal growth.

Transparency is a key part of Personos' design. By clearly explaining how personality psychology informs its recommendations, the platform empowers users to evaluate the relevance and accuracy of the insights it provides.

Privacy and User-Focused Design

Privacy concerns are top of mind for many users, with 68% of consumers globally worried about their online privacy and 57% viewing AI as a potential threat to it [21]. Personos addresses these concerns with a strong commitment to privacy and user control.

The platform follows a privacy-by-design philosophy, embedding data protection measures right from the start of its development. All personality insights and communication analyses are accessible only to the user, ensuring that sensitive information remains private. Users have full control over their data, with the ability to access, modify, or delete it through straightforward privacy settings.

To further safeguard user information, Personos employs end-to-end encryption and strict access controls to keep data secure from unauthorized access [20]. Beyond protecting user data, this approach reinforces trust, so users can feel confident in their interactions with the platform.
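The owner-only access and the access, modify, and delete controls described above follow a common pattern. The following is a generic sketch of that pattern. It assumes a simple in-memory store and a hypothetical `InsightStore` class. It does not describe Personos' actual implementation, and a real system would add authentication, persistence, and encryption.

```python
from dataclasses import dataclass


class AccessDenied(Exception):
    """Raised when anyone other than the owner touches a record."""


@dataclass
class Insight:
    owner_id: str
    text: str


class InsightStore:
    """Hypothetical in-memory store where each insight is visible only to its owner."""

    def __init__(self):
        self._records = {}  # insight_id -> Insight
        self._next_id = 1

    def _owned(self, requester_id, insight_id):
        insight = self._records.get(insight_id)
        # Same error for "missing" and "not yours", so existence is not leaked.
        if insight is None or insight.owner_id != requester_id:
            raise AccessDenied(insight_id)
        return insight

    def add(self, owner_id, text):
        insight_id = self._next_id
        self._next_id += 1
        self._records[insight_id] = Insight(owner_id, text)
        return insight_id

    def read(self, requester_id, insight_id):
        return self._owned(requester_id, insight_id).text

    def update(self, requester_id, insight_id, new_text):
        self._owned(requester_id, insight_id).text = new_text

    def delete(self, requester_id, insight_id):
        self._owned(requester_id, insight_id)  # ownership check first
        del self._records[insight_id]

    def export_all(self, requester_id):
        """Return every insight the requester owns (data access and portability)."""
        return {i: r.text for i, r in self._records.items()
                if r.owner_id == requester_id}
```

Every operation goes through the same ownership check, so no code path exposes one user's insights to another.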

Conclusion: Balancing Control and AI's Potential

AI-powered conversational tools have a profound impact on user autonomy, and the nature of that impact depends largely on how they are designed. The same tools can either enhance or limit a user's ability to make decisions. They tend to enhance it when developers prioritize user empowerment over purely commercial goals.

The most effective AI systems walk a fine line. They draw on AI's expanding reach while ensuring users remain in control of their interactions [24]. Chatbot usage has grown 92% since 2019, and chatbots now handle 65% of B2C communications. This approach fosters trust and creates technology that genuinely empowers people. Striking this balance matters more as design choices increasingly shape how people make decisions.

Transparency and user control are at the heart of AI's success. When users understand how systems work and have the ability to manage their interactions, engagement becomes more meaningful. For instance, 80% of consumers prefer having the option to switch to human agents when interacting with AI [24].

"We now have machines that can mindlessly generate words, but we haven't learned how to stop imagining a mind behind them" [22].

This quote highlights our tendency to attribute human qualities to AI, which can increase the risk of manipulation. That’s why ethical design is so important. The goal should be to create AI systems that support human decision-making, not replace it. This means avoiding features that mislead users and focusing on those that encourage informed choices.

Key Takeaways

Moving forward, developers and users must work together to ensure AI enhances human abilities without compromising judgment. The ideal tools provide insights and options, leaving final decisions in the hands of users. Platforms like Personos exemplify this approach.

Here’s what’s essential:

- Transparency about how systems function

- Meaningful user control over AI interactions

- Ongoing oversight to address bias and prevent manipulation

By embracing these principles, we can build tools that prioritize user empowerment and trust. As Demis Hassabis, CEO of DeepMind, aptly states:

"We're transitioning from narrow AI to systems that can genuinely understand and interact with the world in meaningful ways" [23].

Achieving a balance between advanced AI capabilities and user autonomy demands careful design and constant vigilance. Users deserve AI that respects their independence, serves their needs, and enhances their lives - not systems designed solely to boost engagement or profits.

FAQs

How does AI impact decision-making, and what are the risks of relying on it too much?

AI has become a powerful tool in decision-making, capable of analyzing vast amounts of data, spotting patterns, and providing recommendations that can boost both efficiency and accuracy. But it’s not without its challenges. Over-relying on AI can chip away at critical thinking, reduce human oversight, and even lead to a gradual loss of essential skills.

There’s also the risk of becoming too dependent on AI, which can result in complacency and mistakes - especially when the reasoning behind AI decisions isn’t clear or easy to explain. Striking the right balance between AI's strengths and human judgment is crucial for making thoughtful and responsible decisions.

What ethical factors should organizations consider when using AI for personalized interactions?

To build trust, organizations need to emphasize transparency by openly explaining how they collect and use user data. This clarity helps users feel more secure and informed. Equally important is adopting strong privacy and security measures to protect personal information and prevent any misuse. Beyond that, following ethical AI practices - like reducing bias and promoting fairness - can help prevent discriminatory results and reinforce user confidence. By staying committed to these values, organizations can encourage trust and ensure AI-driven personalization is used responsibly.

How can users stay in control of their AI interactions while fully leveraging its benefits?

To maintain control over AI interactions, it's important for users to establish clear rules about how their data is handled and define the AI's role. For instance, tweaking privacy settings and outlining specific tasks the AI is allowed to assist with can go a long way in preserving autonomy.

On top of that, opting for tools that are upfront about their operations and offer structured, user-driven conversation flows keeps users informed and actively involved. These measures allow individuals to benefit from AI's capabilities while ensuring they stay in charge of their choices.
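The two habits this answer recommends can be expressed as explicit, user-owned settings. The first is limiting which tasks the assistant may help with. The second is deciding how long conversation data is kept. The sketch below assumes a hypothetical `AssistantScope` object, task names, and confirmation step. Real assistants expose these controls differently, if at all.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class AssistantScope:
    """User-defined rules for what a hypothetical assistant may do (illustrative only)."""
    allowed_tasks: set = field(default_factory=set)
    retain_history_days: int = 0       # 0 means: do not keep conversation history
    require_confirmation: bool = True  # the AI proposes, the user decides


def check_request(scope, task):
    """Return (allowed, message) for a task the assistant wants to perform."""
    if task not in scope.allowed_tasks:
        return False, f"'{task}' is outside the scope you set; ask before helping."
    if scope.require_confirmation:
        return True, f"Draft ready for '{task}'. Apply it? (yes/no)"
    return True, f"Applied '{task}'."


def purge_expired(history, scope, now=None):
    """Drop stored (timestamp, message) pairs older than the retention window."""
    if now is None:
        now = datetime.now(timezone.utc)
    if scope.retain_history_days <= 0:
        return []
    cutoff = now - timedelta(days=scope.retain_history_days)
    return [(ts, msg) for ts, msg in history if ts >= cutoff]


if __name__ == "__main__":
    scope = AssistantScope(allowed_tasks={"summarize", "draft_reply"},
                           retain_history_days=7)
    print(check_request(scope, "draft_reply")[1])
    print(check_request(scope, "send_email")[1])
```

Keeping the confirmation step on by default means the assistant drafts and the user decides. This mirrors the structured, user-driven flow described above.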