Top AI Tools for Emotional Trigger Detection

Compare eight AI platforms that detect emotional triggers across text, voice, and video—covering features, integrations, real-time detection, and privacy.

AI tools can now detect emotional triggers in real time, helping teams manage disputes, improve communication, and spot emotional shifts that would otherwise go unnoticed. Here's a quick look at eight of them:

- Personos: Focuses on personality-based insights using text input. Integrates with Slack and Teams for seamless workflow support.

- Hume: Specializes in voice and facial analysis across multiple dimensions. Supports integration with SDKs and APIs.

- Imentiv AI: Combines facial expressions, vocal tones, and text for precise emotional analysis.

- CloudTalk: Designed for call centers, it detects sentiment shifts during phone conversations.

- Youper: A mental health chatbot that uses text-based Cognitive Behavioral Therapy (CBT) exercises.

- Woebot: A text-based mental health companion with real-time emotional detection.

- Lexalytics: Analyzes unstructured text like emails and reviews for emotional and contextual insights.

- Affectiva: Uses video and voice to analyze facial expressions and vocal tones, backed by a massive emotion database.

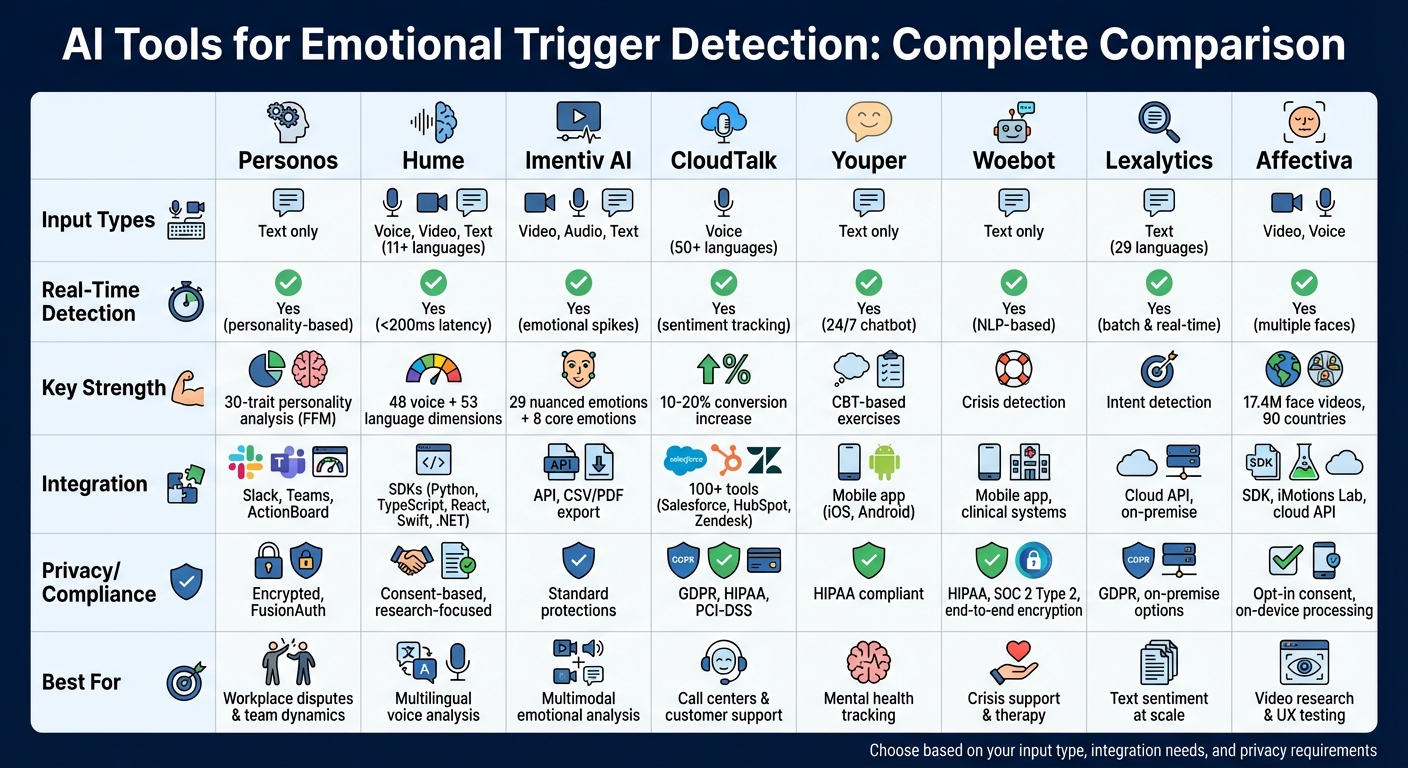

Quick Comparison:

| Tool | Input Type | Key Feature | Integration Options | Privacy Focus |
|---|---|---|---|---|
| Personos | Text | Personality-based insights | Slack, Teams | Encrypted, private chats |
| Hume | Voice, Video, Text | Multidimensional emotional analysis | SDKs, APIs | Consent for voice use |
| Imentiv AI | Video, Audio, Text | Multimodal emotional snapshots | API | Standard protections |
| CloudTalk | Voice | Real-time sentiment during calls | CRM integrations | GDPR compliant |
| Youper | Text | CBT-based emotional tracking | Mobile app | HIPAA compliant |
| Woebot | Text | Crisis detection and therapeutic tools | Mobile app | End-to-end encryption |
| Lexalytics | Text | Detailed sentiment and intent analysis | Cloud API, on-premise | GDPR support |
| Affectiva | Video, Voice | Real-time facial and vocal emotion tracking | SDKs, iMotions Lab | Consent-based model |

These tools offer practical solutions to detect emotional triggers, whether it's through text, voice, or video. Choosing the right one depends on your needs, such as input type, integration preferences, and privacy requirements.
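To make that selection step concrete, here is a minimal Python sketch that filters the quick comparison table by required input types and an optional privacy keyword. The `Tool` class and the `shortlist` helper are illustrative names, not part of any vendor's product, and the rows are simply transcribed from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    inputs: frozenset
    integrations: str
    privacy: str


# Rows transcribed from the quick comparison table.
TOOLS = [
    Tool("Personos", frozenset({"text"}), "Slack, Teams", "Encrypted, private chats"),
    Tool("Hume", frozenset({"voice", "video", "text"}), "SDKs, APIs", "Consent for voice use"),
    Tool("Imentiv AI", frozenset({"video", "audio", "text"}), "API", "Standard protections"),
    Tool("CloudTalk", frozenset({"voice"}), "CRM integrations", "GDPR compliant"),
    Tool("Youper", frozenset({"text"}), "Mobile app", "HIPAA compliant"),
    Tool("Woebot", frozenset({"text"}), "Mobile app", "End-to-end encryption"),
    Tool("Lexalytics", frozenset({"text"}), "Cloud API, on-premise", "GDPR support"),
    Tool("Affectiva", frozenset({"video", "voice"}), "SDKs, iMotions Lab", "Consent-based model"),
]


def shortlist(required_inputs, privacy_keyword=""):
    """Return names of tools that accept every required input type and whose
    privacy note mentions the keyword (case-insensitive; empty matches all)."""
    required = set(required_inputs)
    keyword = privacy_keyword.lower()
    return [
        tool.name
        for tool in TOOLS
        if required <= tool.inputs and keyword in tool.privacy.lower()
    ]


print(shortlist({"voice"}))                         # tools that accept voice
print(shortlist({"text"}, privacy_keyword="GDPR"))  # text tools mentioning GDPR
```

A keyword match on a one-line privacy note is only a first pass; it narrows the list before you read each vendor's actual policy.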

1. Personos

Personos blends personality psychology with AI to identify emotional triggers using the Five Factor Model (FFM) [4].

Input Modality (Voice/Video/Text)

This platform works entirely through text-based input. Users start by completing a quick 5-minute FFM assessment, which evaluates 30 traits on an 80-point scale [2][3]. They can then use "Personos Chat" to describe disputes or situations as they happen, and upload materials such as coaching notes, job descriptions, or relationship histories for added context [2]. Typing "@" followed by a person's name pulls that person's personality profile into the conversation [2]. Together, the assessment, the uploaded context, and the tagged profiles give the AI the background it needs to flag likely triggers as a situation is described.

Real-Time Emotional Trigger Detection

Personos evaluates personality traits, situational context, and relationship dynamics [2][3]. When a conflict is described in the chat, the AI uses personality profiles to predict potential friction points before they escalate. The "Relationship Reports" feature sheds light on how two personalities interact, pinpointing areas where communication styles might clash [2]. Additionally, the system offers "Personos Prompts" - short, actionable suggestions that pop up during sensitive conversations to help you defuse tension before it intensifies [2].
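The idea of comparing two profiles to find likely friction points can be illustrated with a small Python sketch. This is not Personos's method, which the source does not describe. It assumes, as a simplification, that a large gap on the same trait hints at a possible clash. The trait names, scores, and the `friction_points` helper are all hypothetical; only the 80-point scale comes from the description above.

```python
# Hypothetical trait scores on an 80-point scale (names and values are made up).
alex = {"assertiveness": 68, "orderliness": 30, "trust": 55, "openness_to_change": 72}
sam = {"assertiveness": 25, "orderliness": 71, "trust": 50, "openness_to_change": 35}


def friction_points(a, b, min_gap=30):
    """Return (trait, gap) pairs where two profiles differ by at least min_gap,
    largest gap first. Only traits present in both profiles are compared."""
    shared = a.keys() & b.keys()
    gaps = [(trait, abs(a[trait] - b[trait])) for trait in shared]
    flagged = [(trait, gap) for trait, gap in gaps if gap >= min_gap]
    # Sort by descending gap, then by name so the order is deterministic.
    return sorted(flagged, key=lambda pair: (-pair[1], pair[0]))


for trait, gap in friction_points(alex, sam):
    print(f"{trait}: gap of {gap} points")
```

In this toy data the two people are close on trust but far apart on assertiveness, orderliness, and openness to change, so those three traits would be the ones worth discussing before a tense conversation.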

"Delivering real-time personality insights tailored to specific situations was not possible until recent advancements in AI." - Personos [3]

Integration with Workflows

Personos integrates seamlessly with Slack and Microsoft Teams, embedding personality-based insights directly into your daily workflows [2][3]. The "ActionBoard" feature turns AI insights into actionable tasks via a Kanban board. With just one click, you can transform chat outputs, report sections, or prompts into measurable action items [2]. This feature ensures that emotional triggers are not only understood but also addressed with structured follow-ups.

Privacy and Data Security Features

Personos prioritizes user privacy by never sharing raw personality scores with others, including leadership. Instead, it offers contextual advice like “considering their high level of creativity, involve them in brainstorming” [3]. All personality data is encrypted, and user authentication is managed via FusionAuth [3]. Chats remain private [2], and the system includes safeguards to detect and prevent misuse. If malicious behavior is identified, the AI halts content generation and may remove users who repeatedly violate guidelines [3].

2. Hume (Empathic Voice Interface)

Hume's Empathic Voice Interface (EVI) is designed to interpret emotional states by analyzing speech prosody and vocal cues. Unlike systems that focus solely on basic emotions, EVI measures expression across 48 dimensions for voice and prosody and 53 dimensions for emotional language, identifying nuanced states like "Empathic Pain" or "Aesthetic Appreciation" [7][9].

Input Modality (Voice/Video/Text)

EVI processes voice inputs using a unified speech-to-speech model, delivering both language and expressive vocalizations in real time [7][10]. Beyond voice, the API supports video for analyzing facial expressions and text for assessing emotional language [6][9]. The EVI 4-mini model recognizes input in 11+ languages, including English, Spanish, Japanese, Korean, French, Portuguese, Italian, German, Russian, Hindi, and Arabic [7]. This multilingual capability ensures precise emotional detection across diverse user bases.

Real-Time Emotional Trigger Detection

EVI leverages WebSockets to monitor and stream emotional state changes, such as frustration, sadness, or excitement, with impressive speed. Its Octave 2 model detects vocal cues with a latency of under 200ms, allowing for swift adjustments in tone and response [5][8]. The system is designed to be interruptible, pausing instantly when a user interjects and resuming seamlessly while maintaining the appropriate emotional context [7].
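To show what monitoring streamed emotional state changes can look like on the receiving side, here is a minimal Python sketch. It does not use Hume's SDK or message format. The `(time_ms, scores)` frames, the emotion names, and the `emotion_shifts` helper are assumptions standing in for whatever a streaming connection delivers.

```python
def emotion_shifts(frames, min_score=0.5):
    """Yield (time_ms, previous, current) whenever the dominant emotion changes.

    frames: iterable of (time_ms, {emotion_name: score}) tuples, a made-up
    stand-in for messages arriving over a streaming connection.
    Frames whose top score is below min_score are treated as inconclusive.
    """
    previous = None
    for time_ms, scores in frames:
        if not scores:
            continue
        top, score = max(scores.items(), key=lambda item: item[1])
        if score < min_score:
            continue  # not confident enough to call a change
        if previous is not None and top != previous:
            yield time_ms, previous, top
        previous = top


stream = [
    (0, {"calm": 0.8, "frustration": 0.1}),
    (200, {"calm": 0.6, "frustration": 0.3}),
    (400, {"calm": 0.3, "frustration": 0.7}),
    (600, {"calm": 0.4, "frustration": 0.45}),  # inconclusive, skipped
    (800, {"excitement": 0.9, "frustration": 0.2}),
]
shifts = list(emotion_shifts(stream))
print(shifts)
```

Because the function is a generator, it can consume frames as they arrive rather than waiting for the conversation to end, which is the property a real-time integration needs.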

"The voice is even richer with nonverbal cues than facial expressions. We pioneered decoding subtle qualities in speech prosody: intonation, timbre, and rhythm." - Hume AI [6]

Integration with Workflows

Developers can integrate EVI into their applications using official SDKs for Python, TypeScript, React, Swift, and .NET. It also connects with major language model providers like Anthropic, OpenAI, and Google [7][8][10]. For specialized needs, the Custom Models API supports transfer learning [9]. A notable example: in 2025, Niantic incorporated Hume AI to create spatially aware AI companions with emotional intelligence, enhancing interactions within their games [5][8].

Privacy and Data Security Features

Hume prioritizes ethical practices, requiring explicit consent for features like voice cloning [10]. Operating as a research-focused platform, it aims to align AI development with human goals and emotional well-being [10]. Subscription plans cater to different needs, with Business and Enterprise tiers offering higher request capacities - standard HTTP requests are capped at 100 per second [7].

3. Imentiv AI

Imentiv AI takes emotional analysis to the next level by combining insights from video, audio, and text to create a complete emotional snapshot. Unlike other platforms that focus on a single input type, Imentiv processes facial micro-expressions, vocal tones, and linguistic patterns simultaneously. This multimodal approach helps identify emotional triggers with remarkable precision, offering insights into how people feel and react in real-time scenarios [12][13]. For audio, it uses Speech Emotion Recognition (SER) to detect eight core emotions: Happy, Sad, Angry, Fear, Surprise, Disgust, Boredom, and Neutral [11]. On the text side, it digs deeper, identifying 29 nuanced emotions like curiosity, hesitation, and confusion in every sentence [11]. Here's how the platform works across different input types.

Input Modality (Voice/Video/Text)

Imentiv AI supports video, audio, and text inputs, making it versatile for various use cases [12]. For audio and video files, the platform includes speaker diarization, which separates and analyzes individual voices in group settings such as meetings, interviews, or podcasts. This ensures accurate emotional analysis for each participant [11]. Users can upload files directly or even analyze YouTube videos by providing URLs. Once the analysis is complete, results can be exported as CSV files or detailed PDF reports, making it easy to share or integrate findings into other workflows [13][15].

Real-Time Emotional Trigger Detection

Imentiv AI doesn’t just analyze emotions - it tracks them in real time using the Valence-Arousal model, which measures emotional intensity and positivity. The system highlights "emotional spikes", pinpointing moments when emotions peak. For example, it can identify when engagement dips during a webinar or when stress levels rise during a customer support call. Its "AI Insights" feature, powered by large language models, allows users to interact with their data directly. Questions like "What caused the drop in engagement?" or "When did frustration peak?" can reveal specific emotional triggers [11][12].
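One simple way to picture spike detection on a valence-arousal timeline is a z-score check on arousal, sketched below in Python. This is an illustrative technique, not Imentiv AI's algorithm, and the sample format, threshold, and `emotional_spikes` name are assumptions.

```python
from statistics import mean, pstdev


def emotional_spikes(samples, z_threshold=1.5):
    """samples: list of (seconds, valence, arousal) tuples.

    Returns (seconds, valence_sign) for every sample whose arousal sits more
    than z_threshold population standard deviations above the recording mean.
    """
    if not samples:
        return []
    arousal = [a for _, _, a in samples]
    mu, sigma = mean(arousal), pstdev(arousal)
    if sigma == 0:
        return []  # flat signal, nothing stands out
    spikes = []
    for seconds, valence, a in samples:
        if (a - mu) / sigma > z_threshold:
            spikes.append((seconds, "negative" if valence < 0 else "positive"))
    return spikes


# Made-up readings from a support call, one sample every 10 seconds.
call = [
    (0, 0.1, 0.2), (10, 0.2, 0.25), (20, 0.1, 0.2), (30, 0.0, 0.3),
    (40, -0.6, 0.9), (50, -0.1, 0.25), (60, 0.1, 0.2),
]
print(emotional_spikes(call))  # [(40, 'negative')]
```

Pairing the spike with the sign of valence separates an excited peak from a stressed one, which is the distinction that matters when you go back to ask what triggered it.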

"It's not just analytics. It's emotion insight powered by empathy." - Imentiv AI [12]

Integration with Workflows

To make emotional analysis seamless, Imentiv AI offers an Emotion API that integrates directly into CRMs, research tools, or custom workflows [11][13]. This allows businesses to monitor buyer hesitation or customer frustration in real time without juggling multiple platforms. For added reliability, the platform includes a "Psychologist's Review" feature, where human experts validate the AI's findings and provide deeper behavioral insights [14]. Organizations needing customized API setups can also access tailored pricing and support [11].

4. CloudTalk

CloudTalk zeroes in on voice-based emotional trigger detection during phone calls, making it a go-to solution for customer support and sales teams. By analyzing both the content of conversations and the way they’re delivered - tracking tone, pitch, and speech patterns - CloudTalk identifies emotions like frustration and anger in real time. It pairs this emotional analysis with natural language processing (NLP) to transcribe conversations, creating a powerful tool for improving customer interactions.

Input Modality (Voice)

CloudTalk processes voice input from inbound and outbound calls, seamlessly converting spoken words into searchable text. With support for over 50 languages, including English, German, French, Spanish, and Portuguese, it’s a versatile platform for global teams. Features like automated tagging and call summaries save agents around 5 minutes per call, freeing them up to focus on resolving customer issues.

Real-Time Emotional Trigger Detection

By breaking conversations into segments, CloudTalk tracks emotional shifts separately for both agents and callers. It categorizes sentiment into three levels (Positive, Neutral, and Negative) and displays them on a visual timeline with green, yellow, and red indicators. Companies using these insights have reported results such as a 10–20% increase in conversion rates and a 30% improvement in collections.
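A per-speaker sentiment timeline of this kind can be sketched in a few lines of Python. The score range, the cut-off band, and the color assigned to each level are assumptions for illustration, since the source does not describe how CloudTalk computes or colors its segments.

```python
# Assumed mapping of levels to timeline colors.
COLORS = {"Positive": "green", "Neutral": "yellow", "Negative": "red"}


def label(score, band=0.25):
    """Map a sentiment score in [-1, 1] to one of three levels."""
    if score > band:
        return "Positive"
    if score < -band:
        return "Negative"
    return "Neutral"


def timeline(segments):
    """Build a per-speaker list of (start_seconds, level, color) entries
    from (start_seconds, speaker, score) segments."""
    result = {}
    for start, speaker, score in segments:
        level = label(score)
        result.setdefault(speaker, []).append((start, level, COLORS[level]))
    return result


# Made-up segment scores for one call.
call_segments = [
    (0, "agent", 0.4), (15, "caller", -0.1), (30, "caller", -0.6),
    (45, "agent", 0.1), (60, "caller", 0.5),
]
for speaker, entries in timeline(call_segments).items():
    print(speaker, entries)
```

Keeping the agent and caller tracks separate makes it possible to see whether a caller's mood recovered after a particular agent response.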

"CloudTalk's AI transcripts and analytics make it easier to track talk ratios, discovery depth, and regional performance - all without requiring formal training to get started."

– Marta Santos, SDR Team Lead, Pipedrive

Integration with Workflows

CloudTalk integrates seamlessly with over 100 popular tools, including CRMs like Salesforce, HubSpot, and Pipedrive, as well as helpdesk platforms like Zendesk, Freshdesk, and Intercom. Sentiment scores and call summaries sync automatically, cutting out manual data entry. Users can also set up keyword monitoring to trigger Slack alerts for compliance issues or emotionally charged moments. The AI Conversation Intelligence feature is available as an add-on for $9 per user per month (billed annually), while base plans start at $25 per user per month.
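As a rough illustration of keyword monitoring that feeds a Slack alert, the Python sketch below scans a transcript for watchlist terms and builds the JSON body that a Slack incoming webhook accepts (an object with a `text` field). The watchlist, call ID, and function names are hypothetical, and the sketch only builds the payload rather than sending it. It says nothing about how CloudTalk implements the feature.

```python
import json
import re

WATCHLIST = ["cancel", "refund", "lawyer", "unacceptable"]  # illustrative keywords


def find_keywords(transcript, watchlist=WATCHLIST):
    """Return watchlist terms that occur as whole words, case-insensitively."""
    hits = []
    for term in watchlist:
        if re.search(rf"\b{re.escape(term)}\b", transcript, flags=re.IGNORECASE):
            hits.append(term)
    return hits


def build_alert(call_id, transcript):
    """Return a JSON string for a Slack incoming webhook, or None if no hits."""
    hits = find_keywords(transcript)
    if not hits:
        return None
    text = f"Call {call_id} flagged for review. Keywords: {', '.join(hits)}"
    return json.dumps({"text": text})


payload = build_alert("C-102", "This is unacceptable. I want a REFUND today.")
print(payload)
```

Whole-word matching avoids false hits inside longer words, at the cost of missing variants such as "cancelled", so a production watchlist would need stemming or explicit variants.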

Privacy and Data Security Features

CloudTalk prioritizes data security, adhering to industry standards like GDPR, HIPAA, and PCI-DSS. All call recordings and transcripts are encrypted, with strict access controls limiting who can view sensitive information. Automated audit logs track data access in real time, and users can disable sentiment analysis to stop data collection immediately. With over 1,660 reviews on G2 and an average rating of 4.3 out of 5 stars, CloudTalk is praised for its easy integrations and accurate transcriptions, though some users have mentioned occasional call quality issues in certain regions.

5. Youper

Youper takes a distinct approach to mental health support by focusing solely on text-based interactions. Founded in 2015 by a psychiatrist, this platform uses an AI-powered chatbot to identify emotional triggers in real time, making it a valuable tool for emotional well-being. Designed for healthcare providers, employers, insurers, and life science companies in regulated industries, Youper has earned widespread trust, reflected in over 60,000 5-star reviews.

Input Modality (Text)

Youper’s functionality revolves around text-based communication, offering daily check-ins through interactive Cognitive Behavioral Therapy (CBT) exercises. These exercises help users track changes in anxiety and mood, all without the need for audio or video, making it easy to use on any device.

Real-Time Emotional Trigger Detection

The platform provides 24/7 support through its AI chatbot, which uses CBT techniques to identify emotional triggers and continuously monitor mental health metrics. For users requiring more than self-guided tools, Youper connects them with behavioral coaches for weekly sessions or psychiatrists for medication management.

"Youper is available on your own time and schedule wherever and whenever needed." – Youper

This round-the-clock accessibility helps users seamlessly incorporate mental health support into their daily routines.

Integration with Workflows

Youper offers tailored solutions for both individual users and businesses. Through its "For Business" and "For Users" programs, the platform integrates emotional health tools into workplace wellness initiatives and personal care plans. All content and methodologies are reviewed by medical professionals like Dr. Hamilton, a psychiatrist, ensuring they meet clinical standards. This makes Youper a practical choice for environments where managing emotions is essential for effective teamwork and communication.

Privacy and Data Security Features

Youper is built with strict privacy measures to safeguard sensitive information, adhering to the requirements of highly regulated industries. Designed to meet the needs of healthcare providers and employers, the platform ensures compliance with standards for handling protected health information. Headquartered in San Francisco, CA, Youper follows robust security protocols to manage and store behavioral health data responsibly.

6. Woebot

Woebot stands out as a text-based AI mental health companion, praised for its approach to providing accessible support through mobile devices [18].

Input Modality (Text)

Woebot communicates entirely through text-based conversations, making it ideal for users who prefer a simple chat interface. There’s no need for video calls or voice recordings - just type and share your thoughts or experiences [16][17].

Real-Time Emotional Trigger Detection

Using natural language processing (NLP), Woebot analyzes user input in real time to identify emotional states. It then matches these emotions with therapeutic techniques tailored to the moment [17]. This NLP framework was developed in collaboration with clinical psychologists and is continuously refined to maintain accuracy [17].

"Woebot gives users the opportunity to share about what they're feeling, and then leverages natural language processing to interpret those inputs and identify which expert-crafted technique would be most beneficial in that moment." – Woebot Health [17]

The platform also includes crisis detection capabilities. If concerning language is detected, Woebot quickly provides links to external resources, ensuring users get the help they need when it matters most [17].

Integration with Workflows

Woebot’s real-time insights integrate smoothly into clinical workflows, making it a handy tool for healthcare providers. For example, Hackensack Meridian Health incorporates Woebot for Adults into their patient care systems. This allows patients to monitor and manage their moods between therapy sessions through features like mood tracking, gratitude journaling, and mindfulness exercises - all available 24/7 [16].

Privacy and Data Security Features

User privacy is a top priority for Woebot. All data is treated as Protected Health Information (PHI) and complies with HIPAA Privacy and Security Rules [17]. The platform has completed a SOC 2 Type 2 examination with zero exceptions, showcasing its dedication to maintaining high security standards [17].

"We do not share or sell user data to advertising companies or for advertising purposes. We didn't do it when we introduced Woebot six years ago. We don't do it now. We will never do it." – Woebot Health [17]

To ensure safety and compliance, Woebot operates a Safety Assessment Committee composed of clinical and regulatory experts. Sensitive data is stored in a secure environment with strict access controls, and the platform undergoes annual external assessments to uphold its security measures [17].

7. Lexalytics

With nearly two decades of development behind it [19], Lexalytics specializes in extracting emotional and contextual insights from unstructured text like emails, surveys, reviews, and chat logs. For organizations that rely heavily on written communication, this platform transforms every interaction into a clearer understanding of underlying sentiments.

Input Modality (Text)

Lexalytics focuses exclusively on text, processing everything from social media posts and customer reviews to Slack messages and other written content. It supports 29 native languages, offering extensive text analysis capabilities [19]. In recognition of its expertise, Lexalytics was named the "Best Overall NLP Company" at the 2023 AI Breakthrough Awards [19].

Real-Time Emotional Trigger Detection

The platform goes beyond basic sentiment analysis, identifying complex emotional triggers using a combination of rules-based sentiment libraries and machine learning [19]. Instead of just labeling text as positive or negative, it delivers detailed sentiment scores for specific entities, topics, and themes. Additionally, Lexalytics can detect intentions - like whether someone is expressing interest in buying, quitting, recommending, or complaining. Its Semantria API processes hundreds of millions of documents daily to provide these insights [20].
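The difference between document-level sentiment and entity-level sentiment plus intent is easier to see in code. The following Python sketch is a toy rules-based scorer. It does not call Salience or Semantria, and the lexicon, intent phrases, and `analyze` function are invented for illustration.

```python
import re

# Tiny illustrative lexicon and intent phrase lists (not a real sentiment library).
SENTIMENT_LEXICON = {"love": 1.0, "great": 0.8, "slow": -0.5, "broken": -0.9, "terrible": -1.0}
INTENT_PHRASES = {
    "buy": ["want to buy", "looking to purchase"],
    "quit": ["cancel my", "switching to"],
    "recommend": ["would recommend", "tell my friends"],
    "complain": ["file a complaint", "speak to a manager"],
}


def analyze(text, entities):
    """Score each entity by the lexicon words in sentences that mention it,
    and list any intents whose phrases appear in the text."""
    lowered = text.lower()
    sentences = [s for s in re.split(r"[.!?]+", lowered) if s.strip()]
    entity_scores = {}
    for entity in entities:
        scores = [
            SENTIMENT_LEXICON[word]
            for sentence in sentences
            if entity.lower() in sentence
            for word in re.findall(r"[a-z']+", sentence)
            if word in SENTIMENT_LEXICON
        ]
        # None means the entity was not mentioned alongside any lexicon word.
        entity_scores[entity] = round(sum(scores) / len(scores), 2) if scores else None
    intents = [
        name for name, phrases in INTENT_PHRASES.items()
        if any(phrase in lowered for phrase in phrases)
    ]
    return entity_scores, intents


review = ("I love the camera. The battery is terrible and charging is slow. "
          "I am switching to another brand.")
print(analyze(review, ["camera", "battery"]))
```

A single overall score would call this review mildly negative. The entity-level view shows the camera is liked, the battery is the problem, and the customer is signalling an intent to leave.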

Integration with Workflows

Lexalytics offers three main products to meet diverse needs: Salience, an on-premise NLP library; Semantria, a cloud-based RESTful API; and Spotlight, a web-based visualization tool [19]. These solutions can be deployed on-premise or across private, hybrid, and public cloud environments. For industries like Pharma, Retail, and Hospitality, pre-built Industry Packs further enhance sentiment analysis accuracy [19]. These flexible integration options make it easier for businesses to incorporate text analysis into their workflows.

Privacy and Data Security Features

For organizations with strict data security requirements, Lexalytics provides the Salience library for on-premise deployment, ensuring sensitive information remains internal [19]. It also supports private and hybrid cloud setups, offering additional layers of control and security [19].

8. Affectiva

Affectiva leverages 18 years of emotion research, supported by the world’s largest emotion database, which includes 17.4 million face videos and 8 billion facial frames gathered from 90 countries [22]. This extensive dataset forms the backbone of its cutting-edge analysis capabilities.

Input Modality (Video and Voice)

Using webcams or video feeds, Affectiva examines facial expressions, identifying seven core emotions - anger, contempt, disgust, fear, joy, sadness, and surprise - along with more nuanced states like sentimentality and confusion [23][25]. Beyond facial analysis, the platform incorporates voice analysis, detecting emotional states through vocal tones and acoustic patterns [21]. With its integration into iMotions, Affectiva can also monitor eye movements and respiration using standard webcams [21].

Real-Time Emotional Trigger Detection

Affectiva's AFFDEX 2.0 toolkit is capable of analyzing multiple faces in real-time, tracking over 20 facial Action Units and broader metrics like attention levels and blink rates [23][25]. Trusted by 90% of the world’s largest advertisers and 26% of Global Fortune 500 companies, its technology has been cited in more than 7,000 academic papers [22][25]. For UX researchers, the platform synchronizes visual attention with facial expressions, helping to identify moments of emotional frustration with precision [21].

Integration with Workflows

The platform offers a cross-platform SDK for Windows and Linux, which operates locally without requiring internet access, as well as a cloud-based API for batch processing [24][25]. Developers can use the Emotion SDK to embed emotion recognition into their applications, while integration with the iMotions Lab suite allows researchers to combine facial coding with biosensors like EEG, GSR, and ECG [21][22]. These tools provide flexibility for a wide range of applications.

Privacy and Data Security Features

Affectiva prioritizes user privacy through an opt-in, consent-based model for data collection, complemented by detailed cookie management controls [22][24]. Since the Facial Coding SDK can run entirely on-device, sensitive data remains secure and does not need to leave the local environment [25]. The company also leads a "Building Trust in AI" initiative, focusing on ethical practices and data transparency [22].

Feature Comparison Table

The right emotional trigger detection tool depends on the type of data you work with, how quickly you need results, and the privacy standards your organization must meet.

The table below compares the eight tools on input types, real-time detection, trigger identification, integration options, and privacy. Personos is the outlier: it works from personality-based insights rather than raw emotion detection.

| Tool | Input Types | Real-Time Detection | Trigger Identification | Integration Options | Privacy & Security |
|---|---|---|---|---|---|
| Personos | Text (conversational AI) | Yes | Personality-based insights | Slack, Microsoft Teams, ActionBoard task tracking | Privacy-focused (interactions visible only to the user) |
| Hume | Face, voice, text | Yes (WebSockets) | Multi-dimensional vocal and facial analysis | REST API, SDKs (Python, TypeScript, React, Swift, .NET) | Free tier available; consent protocols for voice features |
| Imentiv AI | Video, audio, text | Yes | Psychological analysis across multiple modalities | API integration | Standard data protection |
| CloudTalk | Voice (call audio) | Yes | Sentiment analysis during customer calls | CRM integrations, API | GDPR compliant |
| Youper | Text (chat-based) | Yes | Mental health triggers and mood patterns | Mobile app (iOS, Android) | HIPAA compliant; encrypted data |
| Woebot | Text (conversational) | Yes | Cognitive behavioral patterns and emotional distress | Mobile app, messaging platforms | End-to-end encryption; research-backed privacy |
| Lexalytics | Text | Batch and real-time | Sentiment themes and entity-level emotions | Cloud API, on-premise deployment | Customizable data retention; GDPR support |
| Affectiva | Face, voice, biosensors | Yes (multiple faces simultaneously) | 7 core emotions, 20+ facial Action Units, and attention metrics | SDK (Windows, Linux), cloud API, iMotions Lab integration | Opt-in consent model; on-device processing available |

When deciding, consider your input data, the speed at which you need results, and how well the tool integrates with your existing systems. Privacy and security features should also align with your organization's guidelines.

Conclusion

Emotions play a crucial role in shaping nearly every interaction we have. These AI tools offer a unique window into what's happening beneath the surface - whether it's picking up on stress in someone's voice, identifying subtle manipulations, or catching the exact moment a conversation starts to escalate.

The real game-changer lies in turning insights into action. Awareness of a trigger won't resolve anything unless it is paired with concrete steps. Tools like Personos take personality data and transform it into practical, trackable tasks, helping you move from understanding to measurable progress [2]. By analyzing how two distinct personalities interact across 30 different traits, Personos provides tailored strategies for managing specific dynamics rather than one-size-fits-all advice.

"By surfacing how the system arrived at the answer... if you choose to take action you can do so with confidence." – Personos [2]

Beyond insights, real-time detection brings the ability to act quickly. When AI tracks conversational flow and flags escalation patterns, it allows you to step in before small irritations spiral into full-blown conflicts [1]. Alerts that catch mismatches - like when someone says "I'm fine" but their tone says otherwise - reveal hidden tensions that might otherwise go unnoticed.
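The "I'm fine" case comes down to comparing what the words say with what the voice says. Here is a minimal Python sketch of that check, assuming two upstream models (not shown, and hypothetical) that each return a sentiment score between -1 and 1. The gap threshold is an arbitrary illustrative value.

```python
def detect_mismatch(text_score, voice_score, min_gap=0.8):
    """Flag when words and tone point in opposite directions.

    Both scores are assumed to lie in [-1, 1] and to come from separate,
    hypothetical text and voice sentiment models.
    """
    opposite_signs = text_score * voice_score < 0
    return opposite_signs and abs(text_score - voice_score) >= min_gap


# "I'm fine" reads mildly positive, but the delivery sounds tense.
print(detect_mismatch(text_score=0.4, voice_score=-0.6))  # True
# Words and tone agree, so nothing is flagged.
print(detect_mismatch(text_score=0.4, voice_score=0.3))   # False
```

Requiring both opposite signs and a minimum gap keeps the check from firing on near-neutral readings where the two models simply disagree by a small amount.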

What makes these tools useful is the objective feedback they provide in moments clouded by emotion. Vendors report measurable gains from acting on that feedback, such as the 10–20% increase in conversion rates cited for CloudTalk users. Whether you're managing workplace disagreements, navigating personal relationships, or guiding others through tricky situations, AI-powered emotional detection can show you what is shifting and when. Used this way, it gives professionals sharper emotional awareness and a chance to resolve conflicts proactively.

FAQs

How do AI tools identify emotional triggers during conversations?

AI tools can now pick up on emotional triggers in real time by analyzing language, tone, and behavior during interactions. Using natural language processing (NLP), these tools detect changes in sentiment, specific keywords, and patterns in written communication, like emails or chat messages. When it comes to voice or video, they go a step further - analyzing speech patterns, tone, and even facial expressions to spot signs of frustration, anger, or anxiety.

By combining these observations with personality psychology models, the AI identifies potential triggers - such as a misunderstanding or a perceived slight. It then provides tailored, context-sensitive feedback. This might include suggesting alternative phrasing, offering calming prompts, or sharing personality-based insights. All of this happens seamlessly, allowing users to address and defuse sensitive moments without disrupting the flow of the conversation.

How do AI tools ensure privacy when detecting emotional triggers?

AI tools that detect emotional triggers handle sensitive data, so privacy protections are a core part of each product. Personos, for instance, keeps chats private to the user and never shares raw personality scores with others, including leadership.

Across the tools covered here, common safeguards include encryption, strict access controls, consent-based data collection, and on-device or on-premise processing that keeps data in your own environment. Several vendors also state compliance with regulations and standards such as GDPR, HIPAA, and SOC 2.

How can emotional detection insights improve workflows?

Emotional detection insights can streamline workflows by weaving AI-driven emotional cues into the tools teams already rely on - like email, chat platforms, CRM systems, or case management software. These cues, such as sentiment scores or emotional triggers, can be attached as metadata within interactions, empowering teams to respond more thoughtfully and in a timely manner.

Take this for instance: real-time alerts can flag conversations that hit a certain negativity level, prompting managers to step in with de-escalation techniques or coaching advice. Emotional data can also be timestamped to highlight spikes during critical moments, offering valuable insights for analysis and better decision-making. On a larger scale, aggregated dashboards can reveal emotional trends across interactions, enabling organizations to fine-tune communication strategies, address sensitive cases more effectively, or enhance training programs.
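Both ideas in that paragraph, a negativity threshold that triggers an alert and an aggregated view across interactions, can be sketched in Python. The threshold, window size, team names, and function names are assumptions chosen for illustration.

```python
from collections import defaultdict
from statistics import mean


def needs_intervention(scores, threshold=-0.5, window=3):
    """True when the mean of the last `window` sentiment scores is at or
    below the threshold. Scores are assumed to lie in [-1, 1]."""
    if len(scores) < window:
        return False
    return mean(scores[-window:]) <= threshold


def team_trends(records):
    """Average sentiment per team from (team, score) records, for a dashboard."""
    by_team = defaultdict(list)
    for team, score in records:
        by_team[team].append(score)
    return {team: round(mean(values), 2) for team, values in by_team.items()}


conversation = [0.2, -0.4, -0.6, -0.7]  # made-up scores, oldest first
print(needs_intervention(conversation))

records = [("support", -0.2), ("support", 0.4), ("sales", 0.5)]
print(team_trends(records))
```

Averaging over a short window rather than reacting to a single message reduces false alarms from one sharp remark, while still catching a conversation that is steadily turning negative.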

By embedding these insights directly into existing workflows, businesses can turn raw emotional data into practical strategies, improving communication, resolving conflicts, and driving better outcomes overall.