Future of AI Coaching: Ethical Sustainability Trends

Examines ethical, privacy, and environmental challenges in AI coaching and practical trends—human oversight, inclusive design, and greener model strategies.


AI coaching has transformed how people approach personal and professional development. It’s no longer just about reminders or task management - AI tools now assist with complex tasks like goal-setting, self-reflection, and team dynamics. But with this progress comes a need to address ethics, privacy, and environmental impact. Here’s what you need to know:

- AI Coaching Evolution: From basic tools to advanced systems using language models, AI now supports coaches without replacing them. It’s made coaching more accessible across all job levels.

- Ethical Challenges: Bias, privacy concerns, and unequal access are major hurdles. Frameworks like the 2024 ICF AI Coaching Framework aim to tackle these issues.

- Sustainability Concerns: AI’s energy and water consumption are significant, especially during model training and usage. Companies like Google are reducing these impacts through energy-efficient practices.

- Human Oversight: AI tools can't replace the depth of human judgment in coaching relationships. They’re best used to handle tasks that free up professionals to focus on building trust and providing support.

- Affordability and Access: Tools like Personos offer affordable, privacy-focused solutions that empower both users and coaches.

AI coaching has potential, but ethical and environmental considerations must remain a priority to ensure it benefits everyone equally.



Ethical Principles in AI Coaching

As AI coaching systems evolve, bias has become a pressing concern. Alicia Hullinger frames the challenge this way:

Can a creation of humanity remain untouched by its creator's biases? [5]

This question underscores the importance of ethical design in AI coaching. Using the AI Coaching Framework [1], the industry addresses key ethical areas such as foundational ethics, relationship dynamics, and technical concerns like privacy and data security. The aim is clear: to create AI tools that enhance coaching while safeguarding professional integrity and client safety. Tackling bias and fostering inclusivity are central to this mission.

Reducing Bias and Designing for Inclusion

Bias in AI systems stems from two main sources: the ethical biases of developers and the use of non-representative training data [5]. To combat this, developers are engaging diverse groups of coaches and clients to create datasets that better reflect a variety of demographics and experiences. This approach helps ensure fairness across different contexts.

Professional coaches play a pivotal role here by participating in human-in-the-loop feedback. By reviewing AI interactions and offering direct input, they help identify and address biases that automated systems might overlook [5]. These efforts not only reduce bias but also support the ethical sustainability of AI in coaching. Additionally, organizations are testing AI tools across various cultural settings before launch, using structured testing protocols to confirm sensitivity and usability [6]. The Designing AI Coach (DAIC) model provides a framework that prioritizes transparency, predictability, and ethical data practices.
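Structured testing can include a simple disparity check on logged outcomes. The sketch below is an assumption, not the DAIC model or any vendor's method. For each demographic group, it computes how often the AI coach makes a given recommendation (here a hypothetical "stretch goal" suggestion), then flags groups whose rate falls below 80% of the highest group's rate. That threshold is the "four-fifths" rule of thumb from U.S. employment-selection guidance. Group labels and records are illustrative only.

```python
from collections import defaultdict

# Hypothetical audit log entry: (demographic_group, ai_recommended_stretch_goal)
# Field meanings are illustrative assumptions, not a real product schema.
AuditRecord = tuple[str, bool]


def selection_rates(records: list[AuditRecord]) -> dict[str, float]:
    """Share of sessions in each group where the AI made the recommendation."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, recommended in records:
        totals[group] += 1
        positives[group] += int(recommended)
    return {g: positives[g] / totals[g] for g in totals}


def flag_disparities(records: list[AuditRecord], threshold: float = 0.8) -> dict[str, float]:
    """Return groups whose rate is below `threshold` times the best group's rate.

    The 0.8 default mirrors the "four-fifths" rule of thumb; a flag is a
    prompt for human review, not proof of bias.
    """
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        return {}
    return {g: r / best for g, r in rates.items() if r / best < threshold}


if __name__ == "__main__":
    log = (
        [("group_a", True)] * 40 + [("group_a", False)] * 60
        + [("group_b", True)] * 25 + [("group_b", False)] * 75
    )
    print(selection_rates(log))   # {'group_a': 0.4, 'group_b': 0.25}
    print(flag_disparities(log))  # {'group_b': 0.625}
```

A flagged ratio tells reviewers where to look. It does not explain the cause, which is why the human-in-the-loop review described above still matters.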

Accountability is another critical aspect. Emerging frameworks treat responsibility for unintended negative outcomes as shared between AI systems and the people who create them. Developers therefore remain accountable and cannot deflect blame onto the tool when harm occurs. Transparency and strong human oversight are also essential for building trust and maintaining ethical standards in AI coaching.

Transparency and Human Oversight

Transparency is key to trust. It requires users to understand how AI systems actually operate, including how they apply personality psychology. AI coaching tools don’t just mirror user inputs; they build their own profiles of user behavior and preferences [4]. This distinction matters: users need to know they’re interacting with an artificial system that interprets their data, not a neutral tool.

A 2023 survey of 205 coaching professionals highlighted transparency and data privacy as top concerns. Interestingly, frequent AI users reported a higher level of ethical awareness compared to occasional users [2]. Clear communication about how AI systems make decisions, what data they collect, and their role in the coaching process is not optional - it’s essential. This transparency allows users to critically evaluate AI suggestions instead of accepting them at face value [4].

AI excels at supporting tasks like goal-setting and reflection but lacks the clinical judgment required for deeper, long-term relationships [3][5]. As Hullinger points out:

An AI chatbot will not know that it may need to refer the client to therapy or another service [5].

This limitation underscores the importance of human oversight. The International Coaching Federation (ICF) mandates that AI-generated content must be reviewed and adjusted by human professionals before it’s used in client interactions [5]. Tools like the AI Coaching Standard Self-Scoring Worksheet help organizations evaluate whether their systems align with established ethical guidelines [6].
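A review requirement like this can be enforced in software instead of being left to habit. The sketch below is a hypothetical design, not the ICF's specification or any product's API. Each AI-generated draft carries a status, and the send step refuses anything that a named human reviewer has not approved. All class and function names are illustrative.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class AIDraft:
    """An AI-generated message that must pass human review before reaching a client."""
    client_id: str
    text: str
    status: Status = Status.PENDING_REVIEW
    reviewer: Optional[str] = None


def review(draft: AIDraft, reviewer: str, approve: bool,
           edited_text: Optional[str] = None) -> AIDraft:
    """Record a coach's decision; the coach may adjust the wording before approving."""
    if edited_text is not None:
        draft.text = edited_text
    draft.reviewer = reviewer
    draft.status = Status.APPROVED if approve else Status.REJECTED
    return draft


def send_to_client(draft: AIDraft) -> str:
    """Deliver only human-approved content; everything else is blocked."""
    if draft.status is not Status.APPROVED or draft.reviewer is None:
        raise PermissionError("AI-generated content requires human approval before sending")
    return f"sent to {draft.client_id}: {draft.text}"


if __name__ == "__main__":
    draft = AIDraft(client_id="c-001", text="Try journaling about this week's setbacks.")
    try:
        send_to_client(draft)
    except PermissionError as err:
        print("blocked:", err)
    review(draft, reviewer="coach-jane", approve=True,
           edited_text="Consider journaling about this week's setbacks; we can discuss them together.")
    print(send_to_client(draft))
```

Making "pending review" the default status means a forgotten review blocks delivery and cannot silently let unreviewed content through.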

Between 2019 and 2022, the coaching industry grew by 54%, reaching around 109,200 practitioners globally, with revenues hitting $4.56 billion in 2022 [7]. As the field continues to expand, adhering to ethical principles like transparency and human oversight will determine whether AI coaching broadens access fairly or exacerbates existing inequalities.

Sustainability Trends in AI Coaching

Environmental Impact of AI Systems

The environmental toll of AI coaching starts with model training but extends well beyond it. Training a single large AI model can release as much carbon as five cars produce over their entire lifetimes. The bigger energy drain, however, comes during inference, the stage when users actually interact with AI coaches. Inference can account for 60% to 90% of a model's total energy use over its lifecycle [9]. To put this in perspective, running a ChatGPT-like service for one year generates 25 times the carbon emissions of the model's initial training [9].

Even a single interaction with an AI assistant consumes about 10 times the electricity of a Google search. Water use adds up too: every 10 to 50 interactions carry a water footprint of approximately 500 mL (around 17 fl oz), largely from data center cooling and electricity generation [9]. Indirect water use, such as the water consumed in generating electricity, accounts for over 80% of this footprint [9].

Microsoft reported a 34% jump in global water consumption from 2021 to 2022, driven by the cooling demands of its growing AI operations [9]. The trend is not slowing down. By 2026, data center electricity consumption could more than double, rising from 460 TWh in 2022 to as much as 1,050 TWh [10][12]. Golestan (Sally) Radwan, Chief Digital Officer at the United Nations Environment Programme, highlighted the stakes:

We need to make sure the net effect of AI on the planet is positive before we deploy the technology at scale [8].

The environmental impact doesn’t stop at energy and water. The short lifespan of high-performance GPUs used in AI systems contributes to mounting electronic waste. Plus, the production of these GPUs requires scarce materials like lithium and cobalt, adding to the strain on natural resources. Ironically, as efficiency improves, demand often rises, which can end up increasing overall resource consumption [9].

These challenges underline the need to rethink how AI systems are built and deployed so that they remain sustainable over time, especially as coaching professionals scale their impact with AI.

Long-Term Viability of Ethical AI Solutions

Creating sustainable AI coaching systems means designing tools that can scale without compromising the planet. Google’s Gemini Apps show what is possible. Between May 2024 and May 2025, Google cut the energy consumption of the median text prompt by a factor of 33 and its carbon footprint by a factor of 44, thanks to software optimizations and clean energy initiatives. By May 2025, each median text prompt required just 0.24 Wh of energy and about 0.26 mL (roughly 0.01 fl oz) of water [11][13].

Location plays a huge role in energy and water efficiency. In 2022, for example, Google’s data center in Finland ran on 97% carbon-free energy, while its data centers in Asia operated on only 4% to 18% carbon-free energy [9]. This disparity has fueled a growing trend of geographical load balancing, in which workloads are shifted to regions with abundant renewable energy and low water stress. U.S. states like Texas, Montana, Nebraska, and South Dakota are emerging as prime locations because of their renewable energy resources and low water demands [10].
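Geographical load balancing comes down to a routing decision. The sketch below assumes that each candidate region reports a carbon-free energy (CFE) share and a water-stress score between 0 and 1. The region names and numbers are invented for illustration and loosely echo the Finland-versus-Asia spread above. The equal default weighting is an arbitrary choice, not an industry standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str
    cfe_share: float     # fraction of energy that is carbon-free (0-1), assumed input
    water_stress: float  # 0 = abundant water, 1 = severe scarcity (hypothetical index)
    has_capacity: bool = True


def score(region: Region, carbon_weight: float = 0.5) -> float:
    """Higher is better: reward clean energy, penalize water stress."""
    water_weight = 1.0 - carbon_weight
    return carbon_weight * region.cfe_share + water_weight * (1.0 - region.water_stress)


def pick_region(regions: list[Region], carbon_weight: float = 0.5) -> Region:
    """Route a batch of coaching workloads to the best-scoring region with spare capacity."""
    available = [r for r in regions if r.has_capacity]
    if not available:
        raise RuntimeError("no region has spare capacity")
    return max(available, key=lambda r: score(r, carbon_weight))


if __name__ == "__main__":
    candidates = [
        Region("north-europe-example", cfe_share=0.97, water_stress=0.1),
        Region("asia-example", cfe_share=0.10, water_stress=0.6),
        Region("us-plains-example", cfe_share=0.80, water_stress=0.2, has_capacity=False),
    ]
    print(pick_region(candidates).name)  # north-europe-example
```

Raising `carbon_weight` favors clean grids, and lowering it favors water-rich regions. Real schedulers would also weigh latency and data-residency rules, which this sketch ignores.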

The industry is also shifting away from "Red AI", which prioritizes accuracy at any cost, toward "Green AI", which focuses on efficiency. Smaller, task-specific models are leading the charge, with energy reductions of up to 90% [9]. Techniques like knowledge distillation, pruning, and quantization shrink models with little loss of quality. When combined with grid decarbonization and efficiency best practices, these approaches can cut AI server carbon emissions by up to 73% and water footprints by up to 86% [10].
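Of the three compression techniques, quantization is the easiest to show in a few lines. The sketch below is a textbook symmetric 8-bit scheme in plain Python, not the method any particular vendor uses. Each float weight is mapped to an integer in [-127, 127] using a single scale factor. Compared with float32, this cuts storage roughly fourfold at the cost of a small rounding error. Production frameworks quantize per channel or per block and run integer kernels; this sketch omits those details.

```python
import array


def quantize_int8(weights: list[float]) -> tuple[array.array, float]:
    """Symmetric per-tensor quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = array.array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize(q: array.array, scale: float) -> list[float]:
    """Recover approximate float weights from the 8-bit codes."""
    return [scale * v for v in q]


if __name__ == "__main__":
    weights = [0.82, -1.27, 0.003, 0.45, -0.66]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(list(q))  # the 8-bit codes
    print(f"max error: {max_err:.4f} (roughly bounded by scale/2 = {scale / 2:.4f})")
    # Storage per weight: 1 byte for int8 vs 4 bytes for float32
    print(q.itemsize, array.array("f", weights).itemsize)  # 1 4
```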

As researcher Aras Bozkurt points out:

The magic of AI is not magic. It is a physical process of computation, heat, water, and carbon, and we must begin to account for it as such [9].

To ensure accountability, organizations using AI coaching tools should demand detailed Environmental Impact Reports from vendors. These reports should include metrics like energy use per 1,000 queries and total water footprint [9]. Such reports are vital when selecting AI tools for helping professionals, because they show whether a tool aligns with ethical and environmental standards. Regulatory efforts like the EU AI Act are beginning to set sustainability expectations for high-risk AI systems, which could pave the way for global standards. Without such measures, the coaching industry risks scaling a technology whose environmental costs could outweigh its benefits.
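The energy and water metrics mentioned above are simple ratios, so vendor reports are easy to sanity-check. The sketch below normalizes a reporting period's totals to energy and water per 1,000 queries. The field names are assumptions, not a standard reporting schema. For a rough reference point, multiplying Google's median per-prompt figures (0.24 Wh and about 0.26 mL) by 1,000 gives 240 Wh and about 260 mL. A median is not a mean, however, so real totals may differ.

```python
from dataclasses import dataclass


@dataclass
class ImpactReport:
    """Totals a vendor might disclose for one reporting period (hypothetical fields)."""
    queries: int
    energy_kwh: float
    water_liters: float


def per_thousand_queries(report: ImpactReport) -> dict[str, float]:
    """Normalize totals to energy (Wh) and water (mL) per 1,000 queries."""
    if report.queries <= 0:
        raise ValueError("report must cover at least one query")
    factor = 1_000 / report.queries
    return {
        "energy_wh_per_1k": report.energy_kwh * 1_000 * factor,   # kWh -> Wh
        "water_ml_per_1k": report.water_liters * 1_000 * factor,  # L -> mL
    }


if __name__ == "__main__":
    # Illustrative totals only: 2 million queries at the median per-prompt rates above
    report = ImpactReport(queries=2_000_000, energy_kwh=480.0, water_liters=520.0)
    print(per_thousand_queries(report))
```

If a vendor's per-1,000 figure sits far above published reference points, ask how it was measured and whether indirect water use from electricity generation is included.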

Personos: A Model for Ethical and Sustainable AI Coaching

Personos is setting a new standard in AI coaching by balancing ethics, privacy, and sustainability. Its approach ensures user trust while delivering meaningful, data-driven coaching experiences.

Privacy-Focused Personality Insights

At its core, Personos prioritizes privacy. All user interactions and data remain strictly private, visible only to the individual user. This aligns with the ethical guidelines outlined in the ICF Artificial Intelligence (AI) Coaching Framework [1]. Unlike other systems that might share or distribute user data, Personos ensures that every insight stays within the user's control.

By emphasizing informational and decisional privacy, the platform builds trust while providing actionable insights [4][1]. This approach not only protects users from potential confidentiality breaches - a major concern for AI coaching systems - but also adheres to the ICF's principles of being "ethically sound" and technologically responsible [1]. This commitment safeguards user trust and ensures data security, creating a solid foundation for long-term engagement.

Communication Prompts and Group Dynamics

Personos takes AI coaching beyond simple reminders, fostering an ongoing, interactive dialogue with users [4]. Its AI-driven prompts anticipate user behavior, helping bridge the gap between intentions and actions [4].

One standout feature is its group dynamics analysis, which produces detailed reports on team interactions. The system analyzes how team members’ personalities interact, identifies areas of friction, and recommends specific, actionable interventions for better teamwork. This kind of insight lets coaches move beyond observation and take informed, targeted action.

The platform also maintains transparency about how its predictions are generated, ensuring users can critically evaluate the system's suggestions. This transparency helps coaches make decisions with confidence, supported by data that’s both clear and actionable.

Giving Coaches Actionable Data

Personos doesn’t just support users - it also empowers coaches. By providing granular insights on individual and team dynamics, it equips professionals with tools to enhance their practice without replacing their expertise.

The same survey of 205 coaching professionals found that generative AI (GenAI) is widely used for administrative tasks. Its role in relational coaching, however, remains limited because of the need for human oversight [2]. Researcher Jennifer Haase notes:

GenAI functions best as an augmentation tool rather than a replacement, emphasizing the need for AI literacy training, ethical guidelines, and human-centered AI integration [2].

Personos embraces this philosophy by complementing human expertise with tools like dynamic personality reports and relationship analysis. These features assist in conflict resolution and team building while ensuring that coaches maintain control over the coaching process [2]. Additionally, at just $9 per seat per month, Personos makes AI coaching affordable, addressing accessibility concerns and helping lower-income users benefit from AI-driven tools [4].

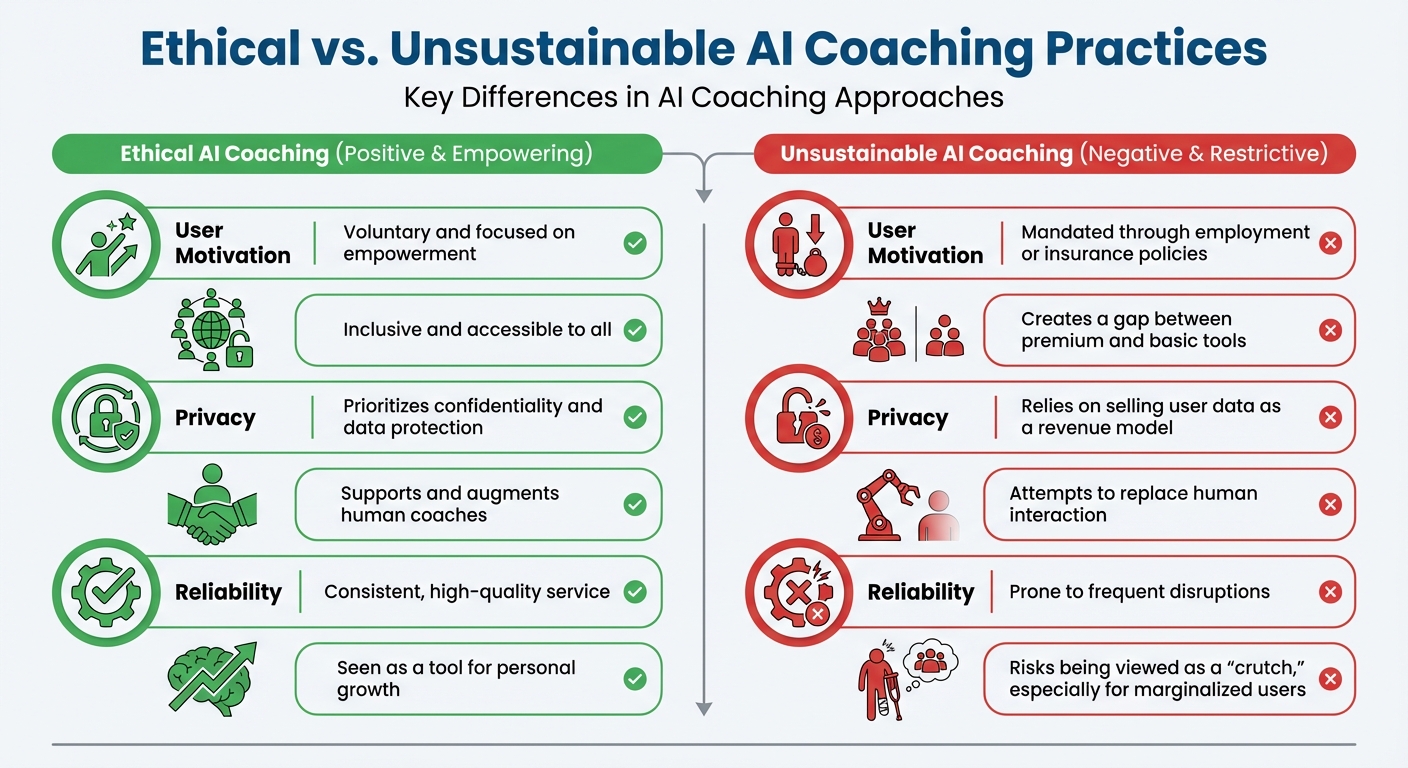

Ethical vs. Unsustainable AI Coaching Practices

Ethical vs Unsustainable AI Coaching Practices Comparison

When we talk about ethical AI in coaching, it’s not just about following a set of rules - it’s about ensuring fairness, inclusivity, and genuine support for all users. On the flip side, unsustainable AI practices often prioritize profit or efficiency at the expense of equity and long-term benefits. The key difference lies in who gains and who gets left behind. Research highlights that AI tools can either level the playing field or deepen societal divides, depending on how they're designed and implemented.

Access and Equity

One major point of contrast is accessibility. Ethical AI coaching prioritizes equal access, ensuring that tools are available to people across all income levels. Unsustainable practices, however, often create a "digital divide", where only those who can afford premium services get access to the best tools. For instance, some programs require users to share sensitive health data, effectively pressuring economically disadvantaged individuals into compromising their privacy.

Voluntary vs. Mandatory Participation

Another difference is the freedom of choice. Ethical AI systems respect user autonomy, allowing individuals to opt in without coercion. On the other hand, unsustainable models often impose participation through tactics like employment contracts that mandate behavioral monitoring or insurance policies that make opting out financially burdensome. This lack of choice can leave users feeling trapped.

The Role of Human Connection

Ethical AI doesn’t aim to replace the human element - it’s designed to enhance it. By handling administrative tasks, AI can free up coaches to focus on building meaningful relationships with their clients. This human connection is crucial for fostering growth and trust. Unsustainable practices, however, often attempt to replace human interaction entirely. A survey of 205 coaching professionals revealed that while generative AI (GenAI) has its uses, it lacks the relational depth needed for effective coaching [2][3]. This underscores the importance of keeping humans at the center of the process.

Ethical vs. Unsustainable Practices: A Quick Comparison

To break it down further, here’s a side-by-side look at how ethical and unsustainable AI coaching practices differ:

| Feature | Ethical AI Coaching | Unsustainable AI Coaching |
|---|---|---|
| User Motivation | Voluntary and focused on empowerment | Mandated through employment or insurance policies |
| Access | Inclusive and accessible to all | Creates a gap between premium and basic tools |
| Privacy | Prioritizes confidentiality and data protection | Relies on selling user data as a revenue model |
| Human Role | Supports and augments human coaches | Attempts to replace human interaction |
| Reliability | Consistent, high-quality service | Prone to frequent disruptions |
| Perception | Seen as a tool for personal growth | Risks being viewed as a "crutch", especially for marginalized users |

These differences highlight the importance of ethical practices that prioritize inclusivity, autonomy, and the preservation of human relationships. Research even suggests that unsustainable models can harm users’ self-confidence - particularly among lower-status groups - undermining the very growth these tools are supposed to promote [4].

Conclusion: Building an Ethical and Sustainable Future for AI Coaching

The decisions we make today will shape the future of AI coaching. According to the 2025 ICF Global Coaching Study, which gathered input from over 10,000 coaches worldwide, the profession is experiencing unprecedented growth and optimism [1]. However, this progress will only hold value if it’s grounded in transparency, inclusivity, and authentic human connection. These principles form the backbone of the ethical frameworks outlined here.

Frameworks like the ICF AI Coaching Framework are essential for setting both ethical and practical standards [1]. These aren’t just guidelines - they represent the foundation of trust in AI coaching. As the International Coaching Federation emphasizes:

By adhering to these standards, stakeholders can contribute to a coaching ecosystem that is ethically sound, technologically advanced, and aligned with ICF's Core Competencies. [1]

AI should serve as a tool to complement human expertise, not replace it. Research shows that AI excels in specific areas like goal tracking and self-reflection. The deeper, long-term aspects of coaching, such as building trust and fostering a "working alliance", remain uniquely human [3]. Platforms like Personos reflect this balance: they use AI to manage administrative duties and provide psychology-based personality insights, which frees coaches to focus on meaningful relationships and transformational guidance.

However, without thoughtful oversight, AI coaching could unintentionally widen societal gaps. Risks include unequal access, coercive workplace implementation, and stigmatization of those who need support the most. To prevent this, AI tools must be designed with care - incorporating diverse testing, continuous feedback, and a commitment to social equity. Organizations should prioritize AI literacy training, maintain human oversight, and routinely audit their systems to identify and address bias.

The challenge is clear: we must create AI coaching systems that uplift everyone, not just a select few. The research is already in place, the frameworks are established, and platforms like Personos demonstrate what’s possible when ethics and technology align. Now is the time to take action.

FAQs

How can I tell if an AI coach is biased?

To spot bias in an AI coach, focus on its fairness, inclusivity, and transparency. Check whether its responses avoid stereotypes and treat people consistently. One practical test is to ask the same question twice, changing only a detail such as gender, age, or cultural background, and see whether the advice shifts in ways the change doesn’t justify. Bias erodes trust and makes coaching less effective, so it’s also worth evaluating whether the AI encourages balanced and ethical communication throughout its coaching process.

What data should an AI coaching tool collect from me?

An AI coaching tool needs to gather specific data to deliver personalized and ethical support while maintaining strict privacy standards. This includes understanding a client’s goals, preferences, progress, communication habits, personality traits, and behavioral patterns. By using this information, the tool can tailor its coaching to meet individual needs effectively.

However, transparency is non-negotiable. Clients must provide informed consent, knowing exactly how their data will be used. Additionally, sensitive information must be securely managed to prevent any misuse or bias. The ultimate aim is to improve the coaching experience while protecting client confidentiality and respecting their rights.
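Informed consent and data minimization can be enforced at the point of collection. The sketch below assumes a hypothetical per-category consent record. Each field the coaching tool wants to store is tagged with a data category (goals, progress, personality traits, and so on, following the list above). Anything the user has not opted into is dropped before storage. Category and field names are illustrative and are not drawn from any specific product.

```python
from enum import Enum


class Category(Enum):
    GOALS = "goals"
    PREFERENCES = "preferences"
    PROGRESS = "progress"
    COMMUNICATION = "communication_habits"
    PERSONALITY = "personality_traits"
    BEHAVIOR = "behavioral_patterns"


# Hypothetical mapping from incoming fields to the consent category that governs them
FIELD_CATEGORIES: dict[str, Category] = {
    "quarterly_goal": Category.GOALS,
    "preferred_check_in_time": Category.PREFERENCES,
    "milestones_completed": Category.PROGRESS,
    "avg_reply_length": Category.COMMUNICATION,
    "big_five_scores": Category.PERSONALITY,
    "login_times": Category.BEHAVIOR,
}


def minimize(raw: dict[str, object], consented: set[Category]) -> dict[str, object]:
    """Keep only fields whose category the user explicitly consented to.

    Unknown fields are dropped too, so new data types cannot slip in without
    first being mapped to a category and disclosed to the user.
    """
    return {
        field: value
        for field, value in raw.items()
        if field in FIELD_CATEGORIES and FIELD_CATEGORIES[field] in consented
    }


if __name__ == "__main__":
    incoming = {
        "quarterly_goal": "Lead one team retrospective",
        "big_five_scores": {"openness": 0.7},
        "login_times": ["08:02", "21:45"],
        "device_location": "51.5,-0.1",
    }
    print(minimize(incoming, consented={Category.GOALS, Category.PERSONALITY}))
    # {'quarterly_goal': 'Lead one team retrospective', 'big_five_scores': {'openness': 0.7}}
```

Filtering on an allowlist instead of a blocklist keeps the default safe. If a data type was never mapped and disclosed, the tool never stores it.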

How can I use AI coaching with a smaller carbon footprint?

Reducing the carbon footprint of AI coaching starts with energy-efficient technologies and practices that consume fewer resources. Where they are good enough for the job, opt for smaller, task-specific models, which can cut energy use by up to 90% [9]. Also favor less resource-intensive delivery methods, such as digital or on-site coaching, as these tend to have a smaller environmental impact.

Additionally, integrating ethical AI design principles - like transparency and accountability - can help cut down on waste and energy consumption. Conducting environmental impact assessments during the technology's lifecycle is another step toward ensuring that sustainability remains a key focus.