Ethical AI Coaching: Consent Best Practices

Maintaining informed, ongoing consent and strict data safeguards ensures AI tools enhance coaching without replacing human judgment.

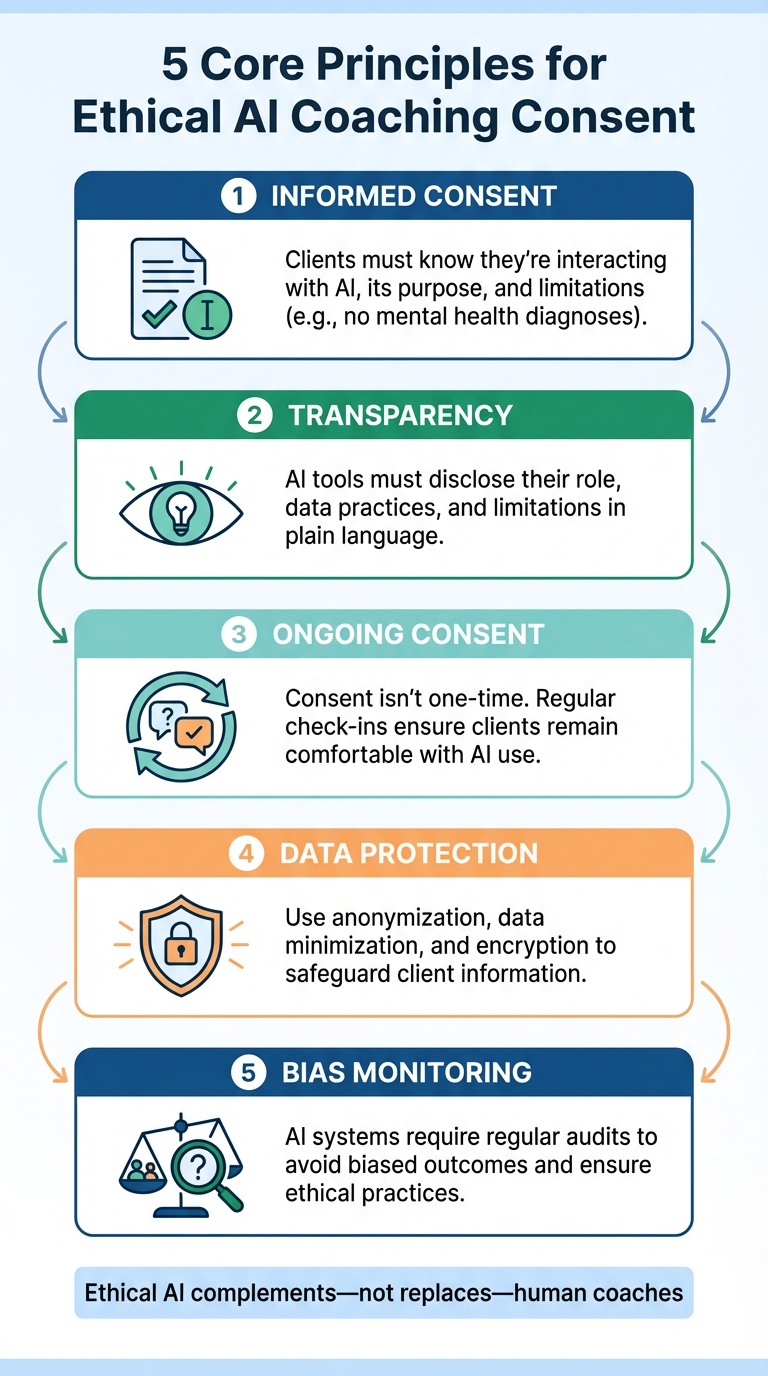

Ethical AI coaching depends on clear, informed consent. Clients must understand how AI tools function, what data they collect, and how AI assists coaches within clearly stated limits. This builds trust and preserves the human connection in coaching relationships. Key takeaways:

- Informed Consent: Clients should know they’re interacting with AI, its purpose, and its limitations (e.g., no mental health diagnoses).

- Transparency: AI tools must disclose their role, data practices, and limitations in plain language.

- Ongoing Consent: Consent isn’t one-time. Regular check-ins ensure clients remain comfortable with AI use.

- Data Protection: Use anonymization, data minimization, and encryption to safeguard client information.

- Bias Monitoring: AI systems require regular audits to avoid biased outcomes and ensure ethical practices.

The International Coaching Federation and platforms like Personos emphasize transparency, client autonomy, and ethical safeguards to ensure AI complements - not replaces - human coaches.

Core Principles for Implementing Consent in AI Coaching

Ethical AI coaching is built on two key pillars: transparency and affirmative opt-in practices. These aren't just formalities - they form the foundation of trust and empower clients to make informed choices.

Transparency in AI Capabilities and Limitations

Clients need to know exactly what they're dealing with. Every AI tool must clearly identify itself as artificial intelligence - not a human coach - at the start of every session, and this disclosure shouldn't be buried in legal fine print [1].

Take Protégée, Pearce Insights' AI tool, as an example. Since December 2024, it has been notifying users at the start of each session that they’re interacting with AI. It even explains its processes in real time, like when it reviews notes from previous sessions [2]. This ongoing transparency ensures clients remain fully aware of the AI’s involvement throughout their coaching experience.

It’s also essential to clearly outline what the AI can and cannot do. Avoid confusing technical jargon - use plain language to explain the tool’s scope and limitations. For instance, Humantelligence’s Ask Aura platform takes transparency a step further by removing demographic details during data processing to reduce bias. It also ensures that all AI-generated insights are backed by credible, traceable research [1].

Equally important is defining the role of the human coach. While the AI might assist by identifying patterns or providing insights, the ultimate responsibility for interpreting and acting on this information lies with the human coach [7].

Clear and Honest Opt-In Processes

Consent isn’t just about a one-time agreement - it’s about creating an ongoing, active partnership. The concept of "affirmative consent" is gaining traction, requiring AI tools to secure clear, enthusiastic approval from users before taking any action involving their data [8].

Transparency helps clients understand how the AI works, but honest opt-in processes ensure they actively choose to participate. This starts with mandatory tick-boxes during account creation. These should cover key permissions, such as confirming the user is 18 or older, agreeing to data storage policies, and acknowledging the AI’s limitations. Pair these checkboxes with a plain-English summary of the terms to make the process accessible [6].
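The tick-box step described above can be sketched in a few lines of Python. This is a minimal illustration, not any platform's actual sign-up flow: the field names (`is_adult`, `accepts_data_storage`, `acknowledges_ai_limits`) and the helper functions are hypothetical. Every box defaults to unticked, so consent has to be an affirmative action by the user.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical checkbox names; adapt them to your own sign-up form.
REQUIRED_CONSENTS = ("is_adult", "accepts_data_storage", "acknowledges_ai_limits")


@dataclass(frozen=True)
class SignupForm:
    # Every box starts unticked: nothing is pre-selected for the user.
    is_adult: bool = False
    accepts_data_storage: bool = False
    acknowledges_ai_limits: bool = False


def missing_consents(form: SignupForm) -> list[str]:
    """Return the required boxes the user has not ticked."""
    return [name for name in REQUIRED_CONSENTS if not getattr(form, name)]


def can_create_account(form: SignupForm) -> bool:
    """Allow account creation only when every required box is ticked."""
    return not missing_consents(form)
```

Returning the list of missing boxes, rather than a bare yes/no, lets the form show the client exactly which permission is still outstanding next to the plain-English summary.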

Vivian Chung Easton, Clinical Product Lead at Blueprint, emphasizes the importance of treating consent as an ongoing dialogue:

"Consent isn't a box checked at intake; it's a conversation you keep having" [7].

To honor this principle, regular check-ins should be built into the process to confirm clients remain comfortable with the AI’s role. Additionally, clients should have easy-to-use options to opt out or switch to manual coaching at any time [7].

It’s also crucial to frame the AI’s role honestly. Present it as a tool that supports the human coach, not as a replacement. Blueprint, for example, uses scripted conversation guides to position AI as a way to:

"stay more focused on the client" [7].

By handling administrative tasks like note-taking, the AI enhances the human connection rather than undermining it.

At Personos (https://personos.ai), these principles are woven into every client interaction, ensuring users feel informed, empowered, and in control from the very beginning. Next, we’ll explore how these consent practices come to life during active coaching sessions.

Best Practices for Managing Consent During Coaching Sessions

Securing consent isn’t just a one-time event at the start of a coaching relationship. It’s an ongoing process that evolves alongside changes in AI technology and client needs. Keeping the dialogue open ensures trust and transparency. A strong foundation begins with formal documentation and clear options for clients to opt out if they choose.

Written Consent and Opt-Out Options

Start by obtaining formal, written consent before using any AI-powered tools in your sessions [5]. This agreement should clearly outline the AI’s role - whether it’s working behind the scenes for tasks like note-taking or directly interacting with the client. It should also explain how client data is stored, who has access to it, and when any recordings or transcripts will be deleted. Highlight certifications like HIPAA compliance or SOC 2 Type II to reassure clients about data security [7].

Clients should always have the option to decline or withdraw their consent for AI involvement without it affecting their coaching experience [5]. Make sure to offer alternatives, such as manual note-taking, so opting out feels like a genuine choice. Document every consent-related discussion, including any changes, to ensure full transparency and accountability [5].
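One way to document consent decisions and honor withdrawals is an append-only log in which the most recent entry decides whether AI may be used. The sketch below assumes hypothetical names throughout (`ConsentLedger`, `ConsentEvent`, the `ai_note_taking` scope); a real system would also need durable, access-controlled storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConsentEvent:
    client_id: str
    action: str   # "granted" or "withdrawn"
    scope: str    # hypothetical scope label, e.g. "ai_note_taking"
    note: str     # free-text summary of the consent discussion
    at: datetime


@dataclass
class ConsentLedger:
    # Events are only appended, never edited, so the history stays auditable.
    events: list = field(default_factory=list)

    def record(self, client_id, action, scope, note="", at=None):
        if action not in ("granted", "withdrawn"):
            raise ValueError("action must be 'granted' or 'withdrawn'")
        when = at or datetime.now(timezone.utc)
        event = ConsentEvent(client_id, action, scope, note, when)
        self.events.append(event)
        return event

    def is_active(self, client_id, scope):
        """The latest event for this client and scope decides the answer."""
        for event in reversed(self.events):
            if event.client_id == client_id and event.scope == scope:
                return event.action == "granted"
        return False  # no record at all means no consent
```

For example, after `ledger.record("c1", "withdrawn", "ai_note_taking", "prefers manual notes")`, a call to `ledger.is_active("c1", "ai_note_taking")` returns `False`, and the earlier grant remains in the history for accountability.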

Once consent is established, help clients feel informed and empowered by explaining AI's role, benefits, and risks.

Educating Clients on AI Benefits and Risks

Consent isn’t just about signing a form - it’s about creating understanding. Use simple, clear language to explain how the AI supports your work during sessions. For instance, you could say:

"The AI tool helps me take notes so I can stay more focused on you during sessions, capture details accurately, and see patterns I might otherwise miss. But I'm the one reviewing everything and making decisions about your care." - Vivian Chung Easton, LMFT, CHC [7]

Reassure clients that AI is a tool meant to enhance, not replace, your expertise [7]. AI-assisted platforms are used by more than 50,000 clinicians and save practitioners an estimated 5 to 10 hours per week on administrative tasks [5]. That efficiency frees up more meaningful time with clients.

Be upfront about the limitations and risks of AI. Discuss issues like algorithmic bias, the potential for inaccuracies (sometimes referred to as "hallucinations"), and why AI is not suitable for crisis situations [9]. As the American Counseling Association emphasizes:

"AI is not a direct replacement for a human counselor and has its pros and cons. It's important that you understand the function and purpose of the AI to make an informed decision." [9]

Adopt a tiered approach to disclosure. Offer a basic explanation of AI usage for all clients, while providing more detailed technical information for those who are curious about how data is processed and secured [5]. Regular check-ins, such as asking, "How are you feeling about the AI tools we're using? Do you have any new questions or want to adjust our approach?" can help ensure clients remain comfortable and informed as the coaching relationship progresses.

At Personos (https://personos.ai), these practices foster a collaborative consent process, giving clients the confidence to make informed choices throughout their coaching journey.

Data Privacy and Security in AI Coaching

Once informed consent is secured, the next step is ensuring the protection of client data with strong privacy and security measures. These efforts are critical for maintaining transparency and honoring the trust clients place in you. Safeguarding data isn't just about meeting legal standards - it's about fulfilling your ethical responsibilities as a coach. Let's look at some specific ways to reduce and anonymize client data.

Data Minimization and Anonymization Techniques

Start by collecting only the data you truly need. Data minimization means gathering just enough information to achieve your coaching goals - nothing more. For instance, instead of asking for a client's full address, consider whether knowing their general region might be sufficient for your purposes [15].

Another key practice is setting clear data retention policies. Regularly purge data that’s no longer needed, and automate deletion processes where possible. If your AI platform generates temporary files during data transfers or processing, work with your technical team to delete these files immediately after use [15][16]. This helps reduce the risk of sensitive information being unnecessarily duplicated.
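An automated retention rule can be as simple as comparing each record's age against a cutoff. The sketch below is illustrative only: the 90-day window, the `created_at` field, and the `split_expired` helper are assumptions, and the right retention period depends on your own policy and legal obligations.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention window; set this from your own written policy.
RETENTION = timedelta(days=90)


def split_expired(records, now, retention=RETENTION):
    """Split records into (kept, expired) using each record's 'created_at'."""
    cutoff = now - retention
    kept = [r for r in records if r["created_at"] >= cutoff]
    expired = [r for r in records if r["created_at"] < cutoff]
    return kept, expired


# Usage: run on a schedule, then delete everything in `expired`.
now = datetime(2026, 1, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "created_at": now - timedelta(days=10)},
    {"id": 2, "created_at": now - timedelta(days=120)},
]
kept, expired = split_expired(records, now)
```

Passing `now` in explicitly, instead of reading the clock inside the function, makes the rule easy to test and to audit.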

Anonymization is another powerful tool. Techniques like data masking or hashing can obscure personally identifiable information (PII) before it ever reaches the AI system [15]. Alternatively, you can train AI models using synthetic data - artificially created data that mimics real-world patterns without exposing actual client details. Advanced methods like differential privacy and federated learning also allow AI to learn from decentralized data sources without accessing raw, sensitive information [15].
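As a rough sketch of masking and hashing, the Python below replaces a client identifier with a keyed hash and masks email addresses in free text before anything is sent to an AI system. The function names and the simple email pattern are assumptions for illustration. Note that keyed hashing is pseudonymization rather than full anonymization: anyone holding the key can re-link the records, so the key must be protected.

```python
import hashlib
import hmac
import re

# Deliberately simple pattern for illustration; real PII detection needs more.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(client_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, client_id.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub("[email]", text)
```

A keyed hash is used instead of a plain hash because identifiers such as client numbers are easy to guess, and an unkeyed digest of a guessable value can be reversed by trying candidates.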

Privacy-by-Design and Encryption Standards

To reinforce your data protection efforts, adopt systems designed with privacy in mind from the ground up. Choose AI tools that incorporate data minimization and encryption as part of their core development process. The NIST Privacy Framework can guide you in managing privacy risks throughout the AI lifecycle [11].

Encryption is key to securing data both in transit and at rest. Look for platforms with strong encryption protocols and implement API rate-limiting to prevent misuse [16]. Another smart move? Isolating your AI development environment from your general IT systems. Using virtual machines or containers can help mitigate security risks posed by third-party code [16].
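API rate-limiting is often implemented with a token bucket: each request spends a token, and tokens refill at a fixed rate up to a cap. The class below is a minimal single-process sketch under that assumption; the `TokenBucket` name and its parameters are hypothetical, and production services typically rely on a gateway or shared store instead.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `refill_per_second`."""

    def __init__(self, capacity: int, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock          # injectable so tests can control time
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        """Return True and spend one token if the request may proceed."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill for the elapsed time, never exceeding the bucket's capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A caller would keep one bucket per API key and reject or delay a request whenever `allow()` returns `False`.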

It's also essential to stay updated on relevant regulations. For example, the Federal Trade Commission (FTC) Act requires transparency about AI usage to prevent consumer deception [12]. State laws are evolving, too. California's CCPA gives consumers the right to opt out of the sale or sharing of their personal information [14], while Colorado's Artificial Intelligence Act (effective June 30, 2026) mandates disclosure for "high-risk" AI interactions [12][13]. If your coaching platform focuses on wellness or mental health, California's SB 243 (effective January 1, 2026) requires suicide prevention measures and allows clients to take legal action for violations [13].

At Personos, we prioritize privacy-by-design principles to protect client data, ensuring ethical and effective AI coaching solutions that leverage personality psychology for better results.

Monitoring Bias, Accountability, and Ethical Compliance

Ensuring ethical AI coaching goes beyond safeguarding client data. It's just as critical to monitor AI systems for bias and establish accountability frameworks, especially as technology evolves and regulations shift.

Addressing Algorithmic Bias and Ensuring Fair Treatment

AI systems can unintentionally reflect the biases of their creators or the data they're trained on. Alicia Hullinger from the International Coaching Federation highlights this concern:

"Because bias is ingrained human nature, and because AI is ultimately a human creation, it can creep into AI algorithms as well" [18].

Bias can emerge at various stages - data collection, model training, or even post-deployment as user interactions evolve [17]. To address these challenges, employ strategies across three stages:

- Pre-processing: Modify or remove training data that could lead to discrimination, such as demographic identifiers [17].

- In-processing: Embed fairness metrics directly into the model's training process.

- Post-processing: Adjust outputs to ensure equitable results after the model has been trained [17].
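A simple check that can support any of these stages is to compare how often the AI produces a favorable output for each group. The sketch below computes per-group selection rates and the gap between the highest and lowest rate, often called the demographic parity difference. It is one of many possible fairness metrics, and the function names and the `(group, recommended)` input shape are assumptions for illustration.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Favorable-outcome rate per group.

    `outcomes` is an iterable of (group, recommended) pairs, where
    `recommended` is True when the AI produced the favorable output.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, recommended in outcomes:
        totals[group] += 1
        positives[group] += int(recommended)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(outcomes):
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(outcomes)
    return max(rates.values()) - min(rates.values())
```

A gap near zero means groups receive favorable outputs at similar rates. A large gap is a prompt for human review and does not prove discrimination on its own.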

However, fairness isn't a universal concept. The Information Commissioner's Office notes:

"fairness questions are highly contextual. They often require human deliberation and cannot always be addressed by automated means" [17].

This means documenting fairness trade-offs and tailoring decisions to your specific coaching context. Participatory design is another effective approach - engage marginalized groups to challenge assumptions and uncover potential risks [17]. Additionally, ensure that the labels your AI uses to define success (like "effective employee") don't inadvertently correlate with protected characteristics [17]. These steps align with a commitment to transparency and informed consent, and they give coaching that combines AI with personality psychology a sound footing for driving meaningful behavioral change.

Finally, systematic audits and oversight help maintain ethical compliance over time.

Establishing Regular Audits and Oversight Mechanisms

Start with industry-standard audits to ensure your systems meet data protection and ethical benchmarks [1]. A strong example comes from Humantelligence (Ask Aura), which in December 2025 implemented "a validated content pipeline" that ties every coaching insight to peer-reviewed behavioral science. Their process includes annual SOC and ISO 27001 audits, reviews by certified coaches, and studies published in reputable journals like the Journal of Occupational and Organizational Psychology [1].

To maintain ethical integrity, form an ethics committee of domain experts to review AI-generated content [17]. Incorporate human oversight by having trained reviewers evaluate AI outputs before they reach clients. This is critical, as the International Coaching Federation warns:

"the introduction of AI bias can compromise the effectiveness and integrity of the process" [18].

Stay informed about regulatory updates. For instance, the UK Data (Use and Access) Act, enacted on June 19, 2025, introduced new guidelines on AI fairness and data protection [19]. Similarly, the International Coaching Federation released its "Artificial Intelligence (AI) Coaching Framework and Standards" in November 2024 to guide ethical AI practices [3]. Engaging in peer-learning and adhering to professional standards will help you navigate these changes effectively [18].

Lastly, implement continuous feedback mechanisms. Tools like Net Promoter Scores, user check-ins, and perceived growth metrics can track AI performance [1]. Testing your tools with diverse users across different contexts ensures positive outcomes for all clients [18]. These efforts help maintain ethical standards even as the regulatory landscape evolves.
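For reference, a Net Promoter Score is the percentage of promoters (ratings of 9 or 10 on a 0 to 10 scale) minus the percentage of detractors (ratings of 0 to 6). A minimal sketch follows, with the function name chosen for illustration:

```python
def net_promoter_score(ratings):
    """Return NPS in the range -100 to 100 for a list of 0-10 ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    # Passives (7 or 8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(ratings)
```

Tracking the score separately for different client groups, not just overall, ties this feedback loop back to the goal of positive outcomes for all clients.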

At Personos, we prioritize regular audits and expert oversight to ensure our AI coaching tools remain fair, accountable, and ethically aligned with the latest standards.

Conclusion

Ethical AI coaching thrives on maintaining ongoing informed consent. This open and continuous communication ensures that clients stay in control, making their own decisions about their coaching experience and how AI contributes to it. It’s a foundation of trust that shapes every coaching interaction.

When you clearly outline how AI analyzes personality data, how it’s safeguarded, and the specific benefits it provides, you create an environment where technology supports - rather than substitutes - human connection. This approach strengthens trust within the coach-client relationship [4]. The International Coaching Federation’s AI Coaching Framework offers structured guidance for this integration, drawing insights from over 10,000 coaches worldwide [10].

Transparency is key, and the coach remains central: the coach interprets AI outputs and provides the human oversight. Coaches now act as Ethical AI Curators, managing AI tools while taking full accountability for decisions [20]. This evolving role aligns with the commitment to informed consent and transparent practices. As the American Counseling Association emphasizes:

"The responsibility for decisions and outcomes remains with the licensed professional" [9].

To maintain ethical standards, run regular audits, minimize bias, and adhere to privacy regulations [1]. Practices like offering clear opt-out options, anonymizing data by default, and ensuring human-led alternatives for clients who decline AI assistance help balance innovation with integrity [21].

At Personos, we embed these principles into our personality-based coaching platform. By grounding AI-powered insights in transparency, consent, and human oversight, we ensure that ethical practices remain at the forefront - fostering trust and empowering every client interaction.

FAQs

How often should I renew consent for AI in coaching?

There is no single fixed interval. Treat consent as ongoing: revisit it in regular check-ins and whenever the AI tools, their data practices, or the client's needs change. Establish clear procedures for these periodic reviews so clients can reassess and confirm their understanding of AI's involvement, which fosters transparency and trust throughout the coaching process.

What should I ask about data storage and deletion?

When looking into AI coaching systems, it’s important to dig into how they handle personal data. Ask about where and how data is stored, what specific types of data are kept, and how long it’s retained. Make sure the system has strong security protocols and clear guidelines for data retention and deletion. Also, check if users can request their data to be removed and confirm that these requests are processed quickly and securely. Clear policies and accountability in these areas are critical for maintaining ethical standards and building trust with clients.

How can I tell if an AI coaching tool is biased?

To spot bias in an AI coaching tool, it's essential to assess how it handles diversity, fairness, and transparency. Pay attention to whether it incorporates sensitivity to different backgrounds, promotes inclusive practices, and provides clear explanations for its decisions. Look for active measures to reduce bias, such as regular audits and user feedback systems. Both developers and users play a role in ensuring the tool upholds fairness, accountability, and ethical guidelines.