Beyond Burnout: Using AI to Sustain Emotional Resilience in Social Work

Explore how AI tools enhance emotional resilience in social work, combating burnout and improving client care through innovative technology.

Burnout is a serious challenge in social work, with 73% of professionals reporting emotional exhaustion. The causes? Overwhelming caseloads, trauma exposure, and administrative burdens. AI offers solutions by reducing stress, automating tasks, and providing personalized tools to support emotional resilience. Key points include:

- Burnout Symptoms: Exhaustion, detachment, reduced accomplishment.

- AI Benefits: Early stress detection, task automation, and real-time coping strategies.

- Examples: Tools like the Allegheny Family Screening Tool prioritize cases, while apps like Wysa and Ginger assist with mental health.

- Ethical Use: Transparency, privacy protections, and human oversight are critical.

AI won't replace human judgment but can help social workers focus on what matters most: their clients. Thoughtful implementation ensures these tools align with professional values and improve care quality.

Emotional Resilience and AI's Impact on Social Work

Emotional Resilience in Social Work Settings

Emotional resilience is what helps social workers not just endure but thrive in the face of crises and ongoing exposure to trauma. It's more than simply coping - it's about learning from challenges and using those experiences to grow, both professionally and personally.

This resilience brings a host of benefits. Social workers who develop it often experience lower stress, greater confidence, and improved communication with clients and colleagues [4]. It allows them to recover from setbacks without dragging emotional baggage into future client interactions. In turn, they become role models for healthy coping strategies, creating the stability needed for effective interventions [4].

But building emotional resilience isn’t automatic - it takes ongoing effort. Social workers can strengthen their resilience through self-awareness and reflective practices, while organizations can contribute by fostering supportive team environments and demonstrating resilience through leadership [4]. These efforts not only improve individual well-being but also help retain skilled professionals in the field [3]. Research highlights that traits like self-awareness, hopefulness, critical thinking, and independence play a key role in reducing stigma and supporting resilience [3].

Now, with advancements in technology, AI tools are stepping in to complement these resilience-building efforts.

AI Tools for Emotional Intelligence and Support

AI-powered platforms are reshaping how social workers manage work-related stress and emotional challenges. These tools go beyond basic mood tracking, offering insights into emotional patterns, early warnings for burnout, and tailored interventions based on individual stress profiles.

Some AI platforms even integrate evidence-based techniques like Cognitive Behavioral Therapy or Dialectical Behavior Therapy, providing 24/7 support for managing stress and emotions proactively [5][6]. This kind of assistance can make a significant difference in helping social workers navigate the emotional demands of their roles.

AI is also making waves in social work education. Dr. Jamie Sundvall, Online MSW Program Director at Touro University Graduate School of Social Work, highlights its potential:

"By using AI in training, we can help students develop evidence-based clinical judgment in a controlled environment before they ever start practicing." [7]

This approach gives future social workers the chance to hone their emotional resilience and clinical skills before they face the complexities of real-world fieldwork, potentially reducing burnout early in their careers.

Ethical AI Use and Privacy Standards

As AI tools become more integrated into social work, ethical use and strong privacy protections are non-negotiable. The National Association of Social Workers (NASW) Code of Ethics offers clear guidelines on technology use:

"Social workers who use technology to provide social work services should obtain informed consent from the individuals using these services during the initial screening or interview and prior to initiating services." [2]

Informed consent is a cornerstone of ethical AI use. Social workers must clearly explain the benefits and risks of AI tools and respect clients' choices about whether to use them. Transparency is crucial - clients need to know how AI-generated insights are used in decision-making and that they can opt out at any time [2].

Protecting sensitive emotional and behavioral data is equally critical. Measures like encryption and rigorous security protocols are essential. The Blueprint for an AI Bill of Rights, published by the White House Office of Science and Technology Policy in 2022, addresses privacy, safety, algorithmic fairness, and the need for human oversight [8].

To ensure fairness, organizations must focus on eliminating bias in AI systems. This involves using diverse data sets, involving varied focus groups, and conducting thorough peer reviews [2][8]. This is particularly important for serving diverse populations, who may otherwise face disproportionate impacts from biased algorithms.
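One simple way a peer review might check an AI tool for uneven impact is to compare how often it flags cases in each group. The sketch below is illustrative only: the group labels, the sample data, and the 0.8 review threshold are assumptions (the threshold echoes the four-fifths rule of thumb from US employment-selection guidance and is not a social work standard).

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of cases the tool flagged, per group.

    `records` is an iterable of (group, flagged) pairs, where `flagged`
    is True when the tool recommended action for that case.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest to the highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was flagged, so there is nothing to compare
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, whether the tool flagged the case)
sample = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]

rates = selection_rates(sample)
ratio = disparity_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed cut-off; each organization would set its own
needs_review = ratio < REVIEW_THRESHOLD
```

A low ratio does not prove the tool is biased, since caseloads can differ between groups for legitimate reasons. It is a prompt for the human review described above, not a verdict.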

It’s important to remember that AI is meant to assist, not replace, professional judgment. As Yan, Ruan, and Jiang emphasize:

"Current AI is still far from effectively recognizing mental disorders and cannot replace clinicians' diagnoses in the near future." [2]

Similarly, Mark D. Lerner, Ph.D., Chairman of The National Center for Emotional Wellness, underscores this point:

"AI can never replace the professional practice of psychiatry, psychology, social work, or other mental health professions. The uniqueness of people will always surpass the rapidly growing cognitive abilities of computers." [9]

For AI to truly support social work, its implementation must be guided by ethical principles like transparency, fairness, and a commitment to doing no harm. Organizations should consider forming digital ethics committees to oversee AI use, provide training on interpreting AI-generated insights, and maintain strict monitoring to ensure these tools deliver on their promise without compromising professional values [2].

AI Tools for Building Emotional Resilience

In response to the growing need to prevent burnout, AI tools are stepping in to provide targeted support for emotional resilience. Social workers, often operating in high-pressure environments, can now use these tools to manage stress, enhance emotional awareness, and maintain their well-being. These technologies integrate seamlessly into daily routines, offering practical, data-driven strategies to address challenges before they escalate. Here’s a look at how AI is making a difference.

AI Emotional Intelligence Assessment Tools

Emotional intelligence is a key factor in social work success. Research shows that 90% of top performers score high in emotional intelligence [10]. AI-powered tools now make it easier to assess and improve emotional intelligence in real time.

Platforms like TalentSmartEQ's EQ Coach and Hyperspace provide innovative solutions. TalentSmartEQ uses data analysis to deliver personalized feedback and timely suggestions aligned with individual growth goals [11]. Meanwhile, Hyperspace employs virtual reality (VR) simulations, creating interactive environments where users can practice communication and refine emotional responses. These VR scenarios adapt dynamically, offering tailored training based on situational context [10].

The benefits of focusing on emotional intelligence are clear. Companies that invest in such training report a 20% increase in employee retention and up to a 35% improvement in team engagement [10]. As Dr. Ryan Baker, a Professor of Education Technology, puts it:

"Integrating AI in emotional intelligence assessments has transformed the way we evaluate and develop our workforce. The granular insights provided by these data-driven systems have been instrumental in driving personal and organizational growth." [10]

Custom Stress Management Apps

AI-powered stress management apps are changing the game by tracking mood, sleep, and stress patterns, then offering tailored recommendations. These apps go beyond generic advice, using machine learning to identify specific stress triggers and suggest effective interventions [12].

Organizations using these tools have seen impressive results: a 25% rise in employee satisfaction, a 30% drop in turnover rates, and significant savings in healthcare costs [13]. For instance, one global tech company reduced turnover by 20% within a year by implementing AI tools that identified overwork patterns and redistributed workloads. Similarly, a healthcare organization used predictive analytics to monitor stress levels, leading to a 30% increase in participation in wellness programs and a return of $2.73 for every dollar invested [13].

These apps analyze data points like work habits, communication trends, and even physiological metrics when paired with wearables. Based on this analysis, they provide actionable advice - whether it’s mindfulness exercises, schedule tweaks, or alerts for potential burnout. To complement these tools, AI-powered chatbots are also available to provide immediate, round-the-clock support.
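To make the idea of a burnout alert concrete, here is a minimal sketch of how such an app might turn daily check-ins into an early warning. It is not how any named product works: the `DailyCheckIn` fields, the seven-day window, and the stress and sleep thresholds are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class DailyCheckIn:
    stress: int         # self-rated, 1 (calm) to 10 (overwhelmed)
    sleep_hours: float  # hours slept the previous night


def burnout_warning(check_ins, window=7, stress_limit=7.0, sleep_floor=6.0):
    """Return True when the most recent `window` days look concerning.

    The rule is deliberately simple: average stress at or above
    `stress_limit` combined with average sleep below `sleep_floor`.
    """
    if len(check_ins) < window:
        return False  # not enough data to judge a trend
    recent = check_ins[-window:]
    avg_stress = mean(c.stress for c in recent)
    avg_sleep = mean(c.sleep_hours for c in recent)
    return avg_stress >= stress_limit and avg_sleep < sleep_floor


# Hypothetical data: one manageable week and one difficult week
calm_week = [DailyCheckIn(stress=4, sleep_hours=7.5) for _ in range(7)]
hard_week = [DailyCheckIn(stress=8, sleep_hours=5.0) for _ in range(7)]

calm_alert = burnout_warning(calm_week)
hard_alert = burnout_warning(hard_week)
```

Real apps would likely weigh more signals and learn thresholds per person. Averaging over a window rather than reacting to a single bad day is the design choice worth noting, because it reduces false alarms.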

AI Chatbots for Mental Health Support

AI chatbots are becoming a vital resource for mental health support, offering 24/7 assistance when traditional options aren’t accessible [14]. These tools provide anonymity, making it easier for people to open up about sensitive issues, and they’re more cost-effective than conventional therapy.

The market for AI healthcare chatbots is booming, with a projected value of $12.2 billion by 2034, growing at an annual rate of 23.9% [14]. Examples include Crisis Text Line, which has managed millions of crisis conversations, providing empathetic responses and connecting users to professional help [15]. Similarly, Ginger uses predictive analytics to detect early signs of mental health issues, prompting timely interventions from mental health coaches [15].

Other notable platforms like Wysa and Youper are gaining popularity. Wysa, rated 4.7/5 on the Google Play Store, supports users dealing with anxiety, depression, and stress [14]. Youper, available for $69.99 per year, has a 4/5 rating and offers personalized mental health support [14].

Alison Darcy, founder of Woebot Health, highlights the accessibility these tools offer:

"We know the majority of people who need care are not getting it. There's never been a greater need, and the tools available have never been as sophisticated as they are now. And it's not about how can we get people in the clinic. It's how can we actually get some of these tools out of the clinic and into the hands of-- of people." [16]

At the same time, social worker Monika Ostroff reminds us of the irreplaceable role of human connection:

"The way people heal is in connection... And they talk about this one moment where, you know, when you're-- as a human you've gone through something. And as you're describing that, you're looking at the person, sitting across from you, and there's a moment where that person just gets it." [16]

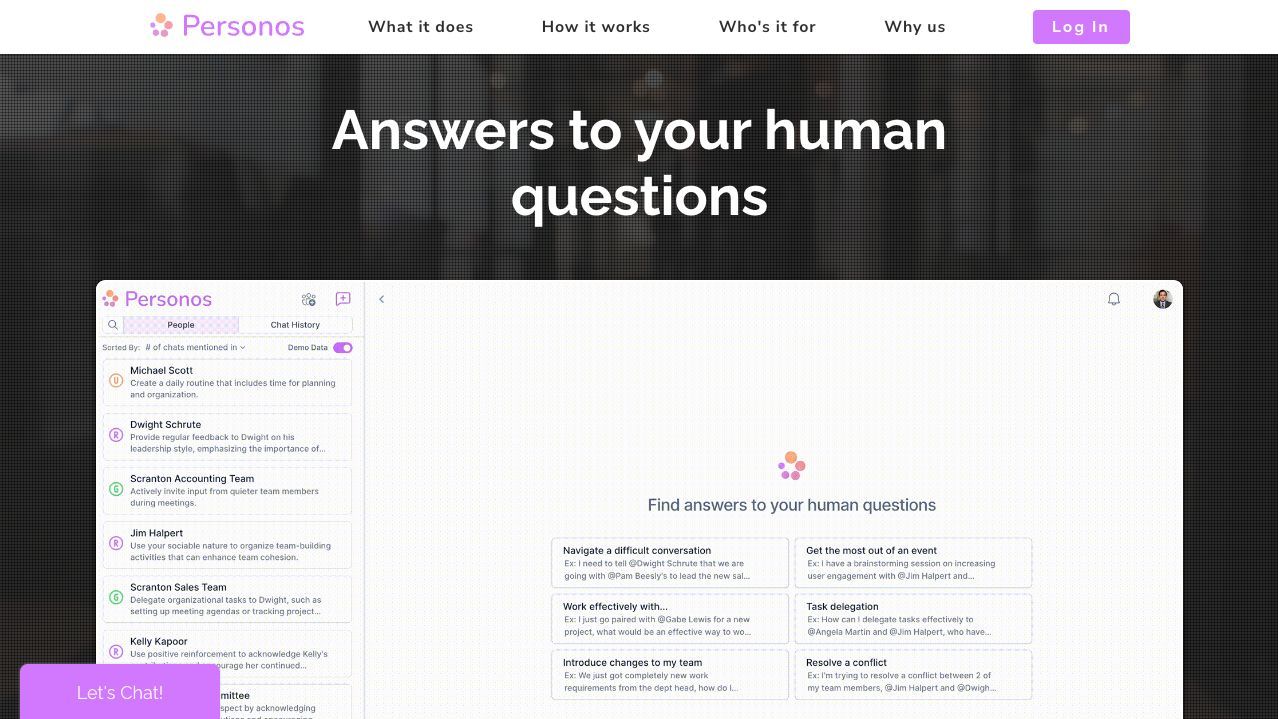

Personos: Communication Support for Social Workers

Personos is an AI tool designed to ease communication challenges and reduce stress in the workplace. By combining personality psychology with AI insights, it helps social workers navigate complex interactions more effectively.

The platform provides dynamic personality reports for individuals and teams, helping users understand varying communication styles. This understanding minimizes stress caused by miscommunications and fosters smoother interactions. For instance, Personos offers real-time prompts during tough conversations, making it easier to handle difficult discussions or collaborate across disciplines.

Additionally, Personos includes tools for analyzing group dynamics, which can prevent conflicts and improve workplace harmony. By promoting better communication, it helps reduce the emotional strain that often accompanies challenging interactions.

At just $9 per month for individuals, Personos is an affordable way to enhance communication skills and reduce workplace stress. For organizations, it offers tailored pricing and team-focused features, ensuring privacy and supporting both personal and professional growth. This tool aligns perfectly with efforts to build long-term emotional resilience in demanding environments.

How to Use AI Tools in Social Work Practice

Incorporating AI tools into social work starts with setting clear goals, understanding what these tools can and cannot do, and regularly assessing their performance.

Choosing and Setting Up AI Tools

When selecting an AI tool, it’s essential to ensure it aligns with the specific problem you’re addressing. Consider factors like accuracy, scalability, ethical concerns, and how easily it can blend into your current systems. Knowing the tool’s strengths and limitations helps avoid unrealistic expectations [20].

Take time to research the tool’s track record. As Sam Ward, Head of AI Research and Development at Enate, advises:

"Research the vendor's history and customer reviews thoroughly. A reputable AI tool should have a proven track record with strong reviews from users in your industry. Demand real case studies that demonstrate clear results, backed by customer testimonials." [19]

Privacy and compliance are critical, especially in healthcare settings. General AI platforms often aren’t designed with HIPAA compliance in mind. Jeffrey Parsons, PhD, NCC, LPCC-S, stresses:

"Most of the platforms out there are not meant for health care practice; they aren't HIPAA compliant. You can't just plug client information into ChatGPT, because you're essentially feeding client data into the public domain." [22]

Social workers must ensure the AI tools they use adhere to federal and state confidentiality laws. Additionally, factor in total costs, including setup, training, and ongoing support. A good AI tool should integrate seamlessly into existing workflows without requiring extensive training. Sam Ward points out:

"An AI tool worth its salt should be intuitive and user-friendly. Complex processes should remain out of sight, allowing your team to integrate the tool into their existing workflows without requiring extensive training. Focus on tools with a clean interface and comprehensive, easy-to-understand documentation." [19]

Always request demos and case studies to evaluate the tool’s effectiveness in real-world scenarios.

Using Personos for Team Communication and Resilience

Once you’ve established your criteria, consider tools that enhance team collaboration. Personos is one such platform, offering personality-based insights to improve communication and reduce workplace stress. At $9 per month for individuals and with customizable organizational plans, it’s accessible for both personal and team use.

To get started, team members create personality profiles, which the platform uses to generate reports on communication styles and preferences. This is especially helpful in social work, where miscommunication can lead to stress and impact client outcomes. For example, Personos can analyze data from engagement surveys or focus groups to identify communication patterns, much like Cisco’s approach to tailoring workspaces for collaboration [21].

The platform’s insights help teams align better, enhancing emotional resilience - a key factor in preventing burnout. Regular feedback and leadership involvement ensure the tool adapts to the team’s evolving needs.

Adding AI Tools to Daily Work Routines

After setting up tools like Personos, the next step is integrating them into daily workflows to support resilience and efficiency. As Jeffrey Parsons reminds us:

"Any tool that we use - and it doesn't matter what it is - does not alleviate our responsibility to our clients and our responsibility for our decisions. We can't replace our personal competence by relying on a computer to do it for us." [22]

Here’s how AI tools can fit into a typical day:

- Morning: Use stress management apps to review data on sleep, mood, and stress patterns, identifying any early signs of burnout.

- Before client meetings: Leverage AI to fine-tune communication strategies, keeping in mind the potential for AI bias and inaccuracies [18].

- Documentation: AI can speed up note-taking. For instance, Beam’s Magic Notes tool, trialed in 2025, reduced documentation time for 91 staff members across three English councils. However, users noted occasional errors that needed manual corrections [18].

- End-of-day reflection: Track emotional patterns and stress levels to pinpoint triggers and develop personalized coping strategies [17].

Transparency with clients is crucial. Explain how AI supports their care, including its benefits and limitations, and ensure they have the option to opt out of AI-assisted processes [17][22]. Staying updated on tool developments is equally important to maintain ethical and effective practice. These daily habits empower social workers to provide better care while staying grounded in their responsibilities.

Addressing AI Implementation Challenges

For social work practices already using AI to enhance emotional resilience, tackling implementation challenges is a top priority. While these tools bring plenty of potential, their successful adoption depends on overcoming a range of common hurdles. Knowing what these challenges are and having actionable solutions can mean the difference between a smooth transition and costly setbacks.

Common AI Adoption Obstacles

Integrating AI into social work comes with its fair share of challenges. Research highlights 24 specific barriers faced by allied health professionals during AI implementation, many of which are highly relevant in social work environments [23].

One of the biggest concerns is privacy and confidentiality. Social workers handle highly sensitive client information, which must adhere to strict federal and state regulations. Ensuring compliance with these laws and ethical standards is critical to protecting client data.

Another issue is algorithmic bias. AI systems can unintentionally mirror and even magnify existing societal biases, which can disproportionately affect marginalized groups. As researchers Lee, Resnick, and Barton explain:

"Because machines can treat similarly-situated people and objects differently, research is starting to reveal some troubling examples in which the reality of algorithmic decision-making falls short of our expectations. Given this, some algorithms run the risk of replicating and even amplifying human biases, particularly those affecting protected groups." [2]

Limited digital literacy is another significant hurdle. Many professionals lack the technical expertise to evaluate AI tools or fully grasp their limitations. This knowledge gap can lead to unrealistic expectations or improper use of these systems.

Social workers also face workflow integration difficulties. Introducing AI tools often disrupts established processes, making it challenging to maintain the balance between operational efficiency and the human connection that lies at the heart of social work.

Finally, there's the challenge of maintaining professional judgment. While AI can offer valuable insights, it cannot replace the nuanced, relationship-based decision-making that defines social work. Instead, it should serve as a complement to human expertise.

These obstacles require thoughtful strategies and ethical guidelines to navigate effectively.

Ethical AI Implementation Guidelines

Overcoming these challenges starts with a structured, ethical approach that prioritizes transparency and client well-being. Here are some practical steps to guide implementation:

- Set up governance structures. Form a digital ethics steering committee that includes social workers, IT specialists, and ethics experts. This group should establish guiding principles for AI use and routinely review practices to ensure they align with ethical standards [2].

- Start small with non-clinical tasks. Begin by using AI for administrative functions, like scheduling or documentation, before moving on to client-facing applications. This phased approach allows staff to familiarize themselves with the technology in low-risk scenarios [23].

- Proactively address algorithmic bias. Use diverse focus groups to identify potential biases early, and subject AI systems to regular testing and peer reviews. This helps ensure fairness and accuracy in decision-making [2].

- Invest in digital literacy training. Incorporate courses on AI and ethical technology use into social work education. Additionally, offer workshops to help staff understand emerging technologies and their practical applications in the field [25].

- Document AI performance thoroughly. Keep detailed records of how AI systems perform, including their strengths and limitations. This helps professionals decide when to rely on AI insights and when to prioritize human judgment [2].

- Be transparent with clients. The NASW Code of Ethics emphasizes the importance of informed consent:

"Social workers who use technology to provide social work services should obtain informed consent from the individuals using these services during the initial screening or interview and prior to initiating services." [2]

- Strengthen data protection measures. Implement practices like data minimization, secure storage, and clear privacy policies. Provide clients with easy-to-understand explanations of how their data is processed and ensure they have ways to exercise their data rights [24].

- Establish accountability systems. Assign specific personnel to monitor AI decisions and conduct regular audits. This helps identify biases and ensures that AI recommendations align with professional standards [24].

- Highlight the benefits of AI. To ease resistance from staff, focus on how AI tools can enhance efficiency and improve care quality. Emphasize that these tools are designed to support - not replace - professional expertise [23].
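The data minimization step in the list above can be sketched in a few lines. This is a hedged illustration, not a compliance recipe: the field names, the `ALLOWED_FIELDS` allow-list, and the `minimize` and `pseudonymize` helpers are hypothetical, and a real deployment would still need legal review under the applicable confidentiality laws.

```python
import hashlib
import hmac

# Assumed allow-list: only the fields the AI tool actually needs.
ALLOWED_FIELDS = {"visit_date", "service_type", "note_summary"}


def pseudonymize(client_id: str, secret_key: bytes) -> str:
    """Replace a client ID with a keyed hash so records can still be linked."""
    digest = hmac.new(secret_key, client_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def minimize(record: dict, secret_key: bytes) -> dict:
    """Keep allow-listed fields and swap the client ID for a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["client_ref"] = pseudonymize(record["client_id"], secret_key)
    return reduced


# Hypothetical record and key, for illustration only
key = b"example-key-kept-outside-the-ai-tool"
record = {
    "client_id": "C-1042",
    "name": "Jane Doe",
    "address": "12 Example Street",
    "visit_date": "2025-03-14",
    "service_type": "housing support",
    "note_summary": "Discussed tenancy options.",
}

safe_record = minimize(record, key)
```

An allow-list is used instead of a block-list so that any new field added to the case system is excluded by default. Free-text fields such as `note_summary` can still contain identifying details, so they need their own review before being shared with any tool.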

Ultimately, successful AI adoption in social work depends on treating technology as a tool to enhance, not replace, human judgment. As professional guidelines remind us:

"Technology is constantly evolving, as is its use in various forms of social work practice. Social workers should keep apprised of the types of technology that are available and research best practices, risks, ethical challenges, and ways of managing them." [2]

Conclusion: AI for Sustained Emotional Health in Social Work

Social work is a field where burnout and emotional fatigue are constant hurdles. The overwhelming burden of administrative tasks often pulls social workers away from what truly matters - connecting with and supporting their clients. This reality highlights the growing role of AI in bringing the focus back to the human side of the profession.

Take Ealing Council in London, for example. During its 2024 pilot phase, the Magic Notes AI-powered system cut administrative workloads by an impressive 48% [27]. Jason Codrington emphasized that relieving these burdens is essential for maintaining focus and avoiding burnout [27]. The value of AI here isn't just about making processes faster - it's about preserving the emotional energy social workers need to provide meaningful care.

How AI Supports Social Workers

AI is doing more than just trimming to-do lists; it's helping social workers re-engage with their mission. By automating routine tasks, these tools free up time for deeper client interactions, while also offering insights that sharpen emotional awareness. For instance, AI can analyze speech, text, and even facial expressions to spot early signs of stress or burnout and suggest tailored stress management strategies [28]. Tools like Personos go a step further, monitoring team sentiment to help managers address conflicts before they escalate [26].

What’s Next for AI in Social Work?

Looking ahead, AI could become an even more powerful ally in high-pressure situations. Imagine real-time emotional analysis tools that provide immediate feedback and coping strategies during stressful moments [29]. AI-driven mentorship programs might also offer around-the-clock guidance on stress management, time efficiency, and decision-making [30]. Integrating wearable tech and mobile health data could further personalize these tools, helping social workers monitor their well-being and act proactively.

On a broader scale, AI could analyze local data to identify emerging social challenges, enabling the creation of targeted, community-focused interventions [1]. Anne-Laure Augeard, AI Project Manager at ESCP's Department of Academic Affairs, captures this potential perfectly:

"At ESCP, we see AI as a partner in fostering essential leadership skills such as decision-making, emotional intelligence, and strategic thinking. By integrating AI into our programmes, we empower students to lead with empathy and agility in an increasingly complex and dynamic world." [26]

The message is clear: AI isn't here to replace the compassion and empathy that define social work. Instead, it's a tool to amplify those qualities, allowing social workers to focus on building relationships, advocating for clients, and creating lasting change. To make this vision a reality, social work education must embrace these technologies, and professionals need training to interpret and apply AI insights effectively [1]. With thoughtful implementation, AI can help social workers thrive in their mission to support individuals and communities.

FAQs

How can AI help social workers maintain emotional resilience and avoid burnout?

AI has the potential to help social workers maintain emotional strength and avoid burnout by taking over repetitive tasks and cutting down on administrative duties. This shift enables them to dedicate more time and energy to their clients instead of getting bogged down by paperwork or logistics.

On top of that, AI-driven tools like stress management apps and platforms focused on emotional well-being can offer tailored mental health support. These tools provide valuable insights into stress levels and emotional health, empowering social workers to manage their own mental well-being while staying effective in their challenging roles.

Incorporating AI into their daily workflows can help social workers build lasting emotional resilience, allowing them to support others without sacrificing their own mental health.

What ethical factors should social workers consider when using AI in their practice?

When using AI in their practice, social workers must prioritize informed consent. This means ensuring clients fully understand how AI tools will be used in their care and what that entails. Alongside this, safeguarding privacy and data security is non-negotiable - client information must always remain protected.

It's equally important to maintain transparency about how AI operates and to ensure fairness in its decision-making processes. This helps prevent any discrimination or bias that might arise. Social workers should stay alert to the risks of bias in AI systems and actively work to address them.

Another critical responsibility is ensuring accountability. Social workers need to understand how AI reaches its decisions and be ready to step in if those decisions could negatively impact a client. Above all, the ethical use of AI must center on the well-being of clients, staying true to the core values and standards of the profession.

How can AI tools like Personos help social workers improve communication and manage stress?

AI tools such as Personos help social workers communicate more effectively by combining personality psychology with AI insights. Dynamic personality reports for individuals and teams clarify differing communication styles, and real-time prompts offer guidance during difficult conversations.

Beyond improving communication, Personos includes tools for analyzing group dynamics, which can help prevent conflicts and reduce the emotional strain of challenging interactions. This means social workers can focus more on their clients while also taking care of their own mental and emotional health, leading to a more balanced and supportive work environment.