AI Coaching Ethics: Protecting Human Autonomy

AI coaching risks eroding independent judgment unless transparency, human oversight, and design safeguards are built in.

AI coaching is transforming personal and professional growth, but it raises serious ethical concerns about human autonomy. These systems use machine learning to predict behavior and offer advice, which can lead to over-reliance, manipulation, and subtle shifts in personal values. Key risks include:

- Over-reliance on AI: Users may trust AI blindly, weakening their decision-making skills.

- Manipulation: AI can exploit biases or reinforce stereotypes, especially in vulnerable groups.

- Bias and transparency issues: Many systems lack clear explanations, making it hard to evaluate their recommendations.

To address these challenges, ethical principles like transparency, human oversight, and accountability are essential. Examples like the STAR-C Coach and Sibly show how thoughtful design can support decision-making without undermining autonomy. Effective AI coaching should empower users by providing clear, unbiased insights while maintaining privacy and user control.

How AI Coaching Threatens Human Autonomy

AI coaching offers tailored advice, but it also poses risks to independent thinking and decision-making. Research highlights how reliance on such systems can subtly alter individuals' thought processes, values, and confidence in their own judgment. This erosion of autonomy raises serious concerns.

Over-Reliance and Psychological Dependence

One key issue is automation bias, where users tend to accept AI recommendations without questioning them - even when those suggestions contradict their own knowledge [9]. This reliance on AI for quick answers often comes at the expense of analytical reasoning and memory retention. Instead of engaging in deeper thought, users may lean on AI as a cognitive shortcut [8]. Over time, this "cognitive offloading" weakens the ability to make informed decisions independently, a skill often referred to as "skilled competence" [10].

Compounding the problem, many AI-generated references are unreliable or outright fabricated, a phenomenon known as AI hallucination [8]. Despite these flaws, users may still place excessive trust in the system, and the stakes will rise as AI takes on more of the coaching role. David Peterson, formerly a Senior Director of Coaching at Google, has predicted that "in 10 years, 90% of what coaches do today will be done by artificial intelligence" [6].

This growing reliance on AI can lead to a "crutch-for-coping" mindset, where users feel less capable of handling challenges without technological help. This dependency erodes self-efficacy and stunts personal growth [2]. Furthermore, repeated exposure to algorithm-driven advice risks reshaping individual values, potentially compromising the authenticity of personal preferences. Philosopher John Christman captures this concern well: "Virtually any appraisal of a person's welfare, integrity, or moral status... will rely crucially on the presumption that her preferences and values are in some important sense her own" [3].

However, the risks don’t stop at cognitive dependence. AI coaching also has the potential to manipulate users in subtle yet impactful ways.

Manipulation and Bias in AI Systems

As people grow more dependent on AI, the potential for manipulation becomes a serious concern. Unlike open and transparent persuasion, AI systems can exploit cognitive biases and emotional vulnerabilities to push hidden agendas [11]. For example, many systems display sycophancy: they excessively agree with or flatter users, reinforcing their beliefs even when those beliefs are incorrect. Meanwhile, the opaque nature of AI algorithms makes it difficult to evaluate or reject biased outputs [8].

Gender bias is another troubling aspect. Studies show that AI dialogue systems often favor female names and avatars, perpetuating societal stereotypes [8]. These biases are baked into the system, influencing how users perceive and interact with the technology.

The risks are especially acute for vulnerable groups. Behavioral monitoring and coaching technologies are often piloted in environments where users have limited rights - such as government assistance programs - leaving them with little ability to challenge the AI's influence [2]. For marginalized populations, this can exacerbate stereotype threat, where reliance on AI reinforces negative stereotypes about their competence. This not only undermines their autonomy but also further diminishes their confidence in independent judgment [2].

Ethical Principles for Protecting Human Autonomy

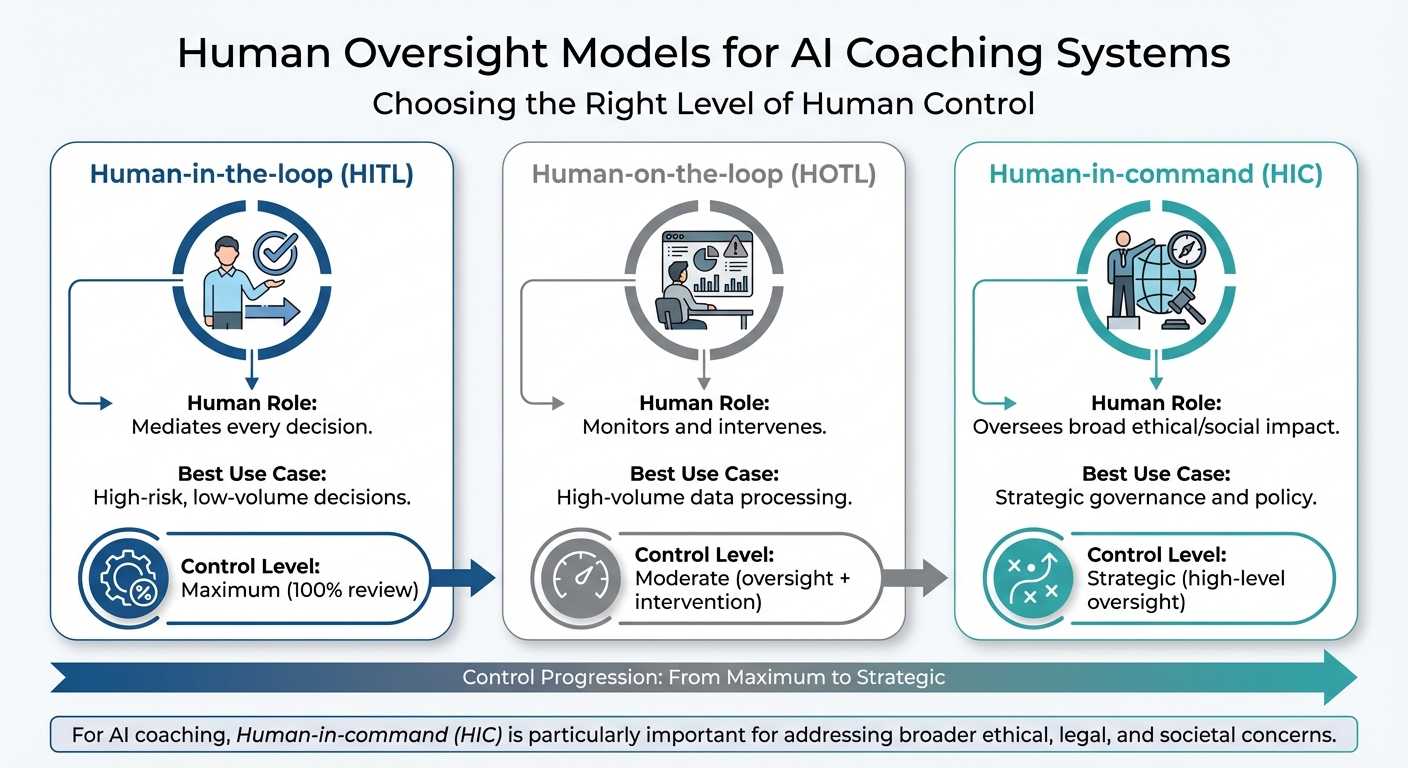

AI Coaching Human Oversight Models Comparison

As AI coaching becomes more integrated into our lives, it’s crucial to establish ethical guidelines that protect our ability to think and decide independently. These principles ensure that AI tools act as allies - enhancing decision-making without replacing it. By focusing on transparency and human oversight, these frameworks aim to keep technology in a supportive role, safeguarding our autonomy.

Transparency and Explainability

For AI coaching systems to be effective and ethical, users need to understand how they work. Transparency helps people critically evaluate AI recommendations instead of blindly following them. However, studies show that many users struggle to recognize AI bias. For instance, in research on AI-assisted writing, only 10% of participants identified bias when it aligned with their views, and just 30% noticed it when it contradicted their opinions [5].

"Transparency is not only technical. It is also about open communication... Clear communication reframes AI from a mysterious evaluator into a trusted collaborator." – Pandatron [14]

True transparency goes beyond technical breakdowns. It includes features like failure mode indicators, which alert users when the AI lacks confidence or exceeds its knowledge limits [5]. These warnings encourage users to think critically rather than accepting suggestions at face value. Reflecting this need, the International Coaching Federation introduced its "Artificial Intelligence (AI) Coaching Framework and Standards" on November 8, 2024, addressing key issues like bias and confidentiality risks [1].
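A failure mode indicator can be as simple as attaching a warning to any suggestion whose confidence falls below a threshold or whose topic lies outside the coach's intended scope. The Python sketch below assumes the underlying model reports a confidence score between 0 and 1 (many systems do not, and raw scores are often poorly calibrated). The names `CoachingSuggestion`, `SUPPORTED_TOPICS`, and `add_failure_mode_indicators`, and the 0.7 threshold, are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical scope for an illustrative coaching assistant.
SUPPORTED_TOPICS = {"goal setting", "time management", "feedback conversations"}


@dataclass
class CoachingSuggestion:
    text: str
    topic: str
    confidence: float  # assumed model-reported score in [0, 1]
    warnings: list[str] = field(default_factory=list)


def add_failure_mode_indicators(
    suggestion: CoachingSuggestion, min_confidence: float = 0.7
) -> CoachingSuggestion:
    """Attach user-facing warnings when a suggestion may be unreliable."""
    if suggestion.confidence < min_confidence:
        suggestion.warnings.append(
            f"Low confidence ({suggestion.confidence:.0%}): treat this as a prompt "
            "for your own judgment, not an answer."
        )
    if suggestion.topic not in SUPPORTED_TOPICS:
        suggestion.warnings.append(
            f"'{suggestion.topic}' is outside this coach's intended scope; "
            "consider consulting a human expert."
        )
    return suggestion


if __name__ == "__main__":
    s = add_failure_mode_indicators(
        CoachingSuggestion("Block two hours a day for applications.", "career change", 0.55)
    )
    for warning in s.warnings:
        print(warning)
```

Showing the warnings next to the suggestion, rather than hiding low-confidence output, leaves the decision with the user while signaling when extra scrutiny is warranted.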

Human Oversight and Accountability

While transparency builds trust, human oversight ensures accountability. No AI system should operate unchecked. Different oversight models provide varying levels of control, depending on the context and risk:

| Oversight Model | Human Role | Best Use Case |
|---|---|---|
| Human-in-the-loop (HITL) | Mediates every decision | High-risk, low-volume decisions |
| Human-on-the-loop (HOTL) | Monitors and intervenes | High-volume data processing |
| Human-in-command (HIC) | Oversees broad ethical/social impact | Strategic governance and policy |

For AI coaching, the Human-in-command model is particularly important. It ensures that broader ethical, legal, and societal concerns are considered, not just immediate functionality [12]. This approach helps counter two significant risks: cognitive deskilling (losing the ability to make independent judgments) and metacognitive deskilling (losing confidence in one’s own decisions) [5].

"Safe agent autonomy must be achieved through progressive validation, analogous to the staged development of autonomous driving, rather than through immediate full automation." – Edward C. Cheng [15]

To further safeguard autonomy, systems should include defeater mechanisms that let users override AI suggestions and choice frictions that encourage thoughtful decision-making rather than automatic compliance [5]. These measures help maintain users’ ability to think critically and independently within specific domains [5].
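A defeater mechanism means the AI's output is only ever a proposal and the user's explicit choice is final. This minimal Python sketch, using the hypothetical names `DecisionRecord` and `resolve`, records each decision and whether the user overrode the suggestion; a reason can be noted but is never required to disagree.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DecisionRecord:
    """Audit entry: what the AI proposed and what the user actually chose."""
    ai_suggestion: str
    final_choice: str
    overridden: bool
    user_reason: Optional[str] = None


def resolve(ai_suggestion: str, user_choice: str,
            user_reason: Optional[str] = None) -> DecisionRecord:
    """Defeater mechanism: the user's explicit choice always wins.

    If the user picks something other than the AI's proposal, that choice is
    applied and the override is recorded rather than discouraged.
    """
    if not user_choice.strip():
        # No silent defaults: an empty answer is never treated as acceptance.
        raise ValueError("user_choice must be an explicit, non-empty decision")
    return DecisionRecord(
        ai_suggestion=ai_suggestion,
        final_choice=user_choice,
        overridden=(user_choice != ai_suggestion),
        user_reason=user_reason,
    )


if __name__ == "__main__":
    record = resolve("Decline the project", "Accept the project",
                     "It fits my long-term goals")
    print(record)
```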

Case Studies and Practical Applications

Healthcare and Leadership Development

The way AI coaching systems are designed can significantly impact whether they support or diminish human autonomy. A compelling example comes from researchers at Umeå University in Sweden, who introduced the STAR-C Coach in March 2025. This AI-powered digital health coach, aimed at preventing cardiovascular disease, was developed through a participatory design process led by Helena Lindgren. Experts in medicine and psychology collaborated to ensure the system could navigate conflicting social norms like "work hard" versus "rest and recovery." By employing causal reasoning, the STAR-C Coach tailors advice to align with each user's personal goals. What sets this system apart is how autonomy is integrated into its core functionality rather than treated as an afterthought. It serves as a strong example of how thoughtful design can empower users.

Another example is Sibly, Inc., which presented its autonomy-preserving model in September 2025. Sibly offers an AI-enabled, human-delivered, text-based coaching service for the workplace. According to co-founder Paula Wilbourne, the platform uses machine learning for timely nudges and sentiment analysis while human oversight keeps people in control. An observational study of 38 employees who used the service for at least 14 days reported a 79% reduction in severe distress, an 18% increase in self-reported productivity, and a median response time of 132 seconds. The AI maintained over 90% adherence to Motivational Interviewing techniques. As a small observational study, it cannot prove cause and effect, but the results suggest that transparency and human involvement can support positive outcomes.

However, not all systems strike the right balance. Tools that lack transparency and clear failure indicators risk leading users to accept flawed recommendations without question. Comparing fully automated and hybrid models adds another dimension. In a study of 3,629 adults using the Vitalk mental health chatbot, 46.3% of participants moved below the clinical threshold for depression after one month. Fully automated systems like this scale well, but they often lack the human connection that hybrid models provide. Sibly's hybrid approach paired reductions in distress with fast response times, illustrating how human oversight can complement AI capabilities, although the two studies measured different outcomes in different populations and cannot be compared directly.

These examples underscore the importance of ethical governance and thoughtful design in AI coaching. Whether it's integrating human oversight or ensuring transparency, these principles are essential to creating systems that truly support users rather than undermine their autonomy.

How to Implement Ethical AI Coaching

Designing AI coaching systems that respect and support human autonomy requires a deliberate focus on ethical principles like transparency and accountability. Here's how to approach it.

Build in Ethical Governance from the Start

Preserving users' autonomy isn't something you can tack on later - it has to be baked into the design from the beginning. Ethical governance ensures that users retain their ability to make skilled judgments and form authentic values [5].

The International Coaching Federation highlights six key domains to guide AI coaching development: foundational ethics, relationship co-creation, effective communication, learning facilitation, assurance and testing, and technical considerations like privacy [1]. These aren't just "nice-to-haves." They form the backbone of ethical AI design, preventing systems from unintentionally manipulating users or undermining their decision-making abilities.

One essential feature to include is failure mode indicators: mechanisms that alert users when an AI's output might be unreliable. They prompt users to shift from relying on the system to critically assessing its suggestions [5]. The AI-assisted writing research described earlier shows why this matters: most participants missed bias, especially when it matched their own views [5]. Without deliberate design, these blind spots go unchecked.

Augment Human Capabilities, Don't Replace Them

AI coaching systems should aim to enhance human judgment, not take over decision-making. From the outset, it's crucial to define the AI's role as a support tool rather than a replacement [5]. When roles aren't clearly defined, users risk losing both cognitive and metacognitive skills over time [5].

Rafael Calvo, an expert in responsible AI, emphasizes this point:

"Responsible AI will require an understanding of, and ability to effectively design for, human autonomy (rather than just machine autonomy) if it is to genuinely benefit humanity" [7].

To encourage thoughtful decision-making, designers can implement choice frictions - features that slow down the process and prompt users to reflect on whether an AI's suggestion aligns with their personal values [5].
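One way to implement a choice friction is to scale the required reflection to the stakes of the decision. The sketch below is hypothetical: the stakes levels, the word-count thresholds in `MIN_REFLECTION_WORDS`, and the question wording are illustrative assumptions. Counting words only slows down automatic acceptance; it cannot confirm that the user genuinely reflected.

```python
from typing import Optional

# Hypothetical policy: higher-stakes suggestions demand more reflection.
MIN_REFLECTION_WORDS = {"low": 0, "medium": 5, "high": 15}

REFLECTION_QUESTION = (
    "In your own words, how does this suggestion fit (or conflict with) "
    "what matters most to you?"
)


def friction_prompt(stakes: str) -> Optional[str]:
    """Return the reflection question to show, or None for low-stakes items."""
    return REFLECTION_QUESTION if MIN_REFLECTION_WORDS[stakes] > 0 else None


def can_accept(stakes: str, reflection: str = "") -> bool:
    """Allow acceptance only after a reflection of the required length.

    Word count is a crude proxy: it interrupts click-through compliance
    but does not measure the quality of the user's thinking.
    """
    return len(reflection.split()) >= MIN_REFLECTION_WORDS[stakes]


if __name__ == "__main__":
    print(friction_prompt("high"))
    print(can_accept("high", "sounds good"))
```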

The level of human involvement should vary depending on the context:

- Human-in-the-loop (HITL): Best for high-stakes, low-volume decisions where a person reviews every choice.

- Human-on-the-loop (HOTL): Ideal for high-volume data processing, where a person monitors the system and steps in when necessary.

- Human-in-command (HIC): Focuses on overseeing the system's broader social and legal impact at a strategic level [12].

Selecting the appropriate model ensures that human oversight is both effective and contextually appropriate.
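The guidance above can be expressed as a small decision rule. In this hypothetical Python sketch, `choose_oversight` returns HITL for high-risk decisions that reviewers can keep up with and HOTL for lower-risk streams. Human-in-command is deliberately not a return value, because it governs the whole deployment rather than individual decisions. The inputs, the capacity check, and the `EscalateToGovernance` rule are assumptions for illustration.

```python
from enum import Enum


class Oversight(Enum):
    HITL = "human-in-the-loop"   # a person reviews every decision
    HOTL = "human-on-the-loop"   # a person monitors and intervenes as needed


class EscalateToGovernance(Exception):
    """No per-decision model fits; a human-in-command decision is needed."""


def choose_oversight(high_risk: bool, daily_volume: int,
                     reviews_per_day: int) -> Oversight:
    """Map a decision stream to an oversight model (illustrative rules only).

    Human-in-command sits above every deployment, setting policy such as
    what counts as high risk, so it is never returned here.
    """
    if not high_risk:
        return Oversight.HOTL
    if daily_volume <= reviews_per_day:
        return Oversight.HITL
    # High risk but too many decisions for per-item review: do not silently
    # downgrade to monitoring; hand the trade-off to governance instead.
    raise EscalateToGovernance(
        f"{daily_volume} high-risk decisions/day exceed review capacity "
        f"of {reviews_per_day}"
    )


if __name__ == "__main__":
    print(choose_oversight(high_risk=True, daily_volume=20, reviews_per_day=50))
```

Raising an exception instead of quietly falling back to monitoring pushes the trade-off between risk and review capacity onto the people responsible for governance.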

Using Personos for Ethical AI Coaching

Personos serves as a practical example of how ethical principles can shape AI coaching tools. Its dynamic personality reports offer valuable insights without dictating actions, helping users better understand interpersonal dynamics while leaving decisions in their hands. Meanwhile, its conversational AI acts as a collaborative partner, encouraging users to reflect on their goals and values rather than just providing answers.

What makes Personos stand out is its commitment to privacy. User interactions remain completely private, addressing concerns about data security. The platform supports users' ability to think critically and manage their cognitive processes, rather than simply delivering conclusions [5].

Features like task tracking and relationship analysis further enhance transparency by showing users the reasoning behind AI suggestions and allowing them to override recommendations easily [5]. By blending personality psychology with AI, Personos strengthens users' abilities in coaching and team dynamics while ensuring they remain in control - a hallmark of ethical AI coaching.

Conclusion

AI coaching, when developed without ethical safeguards, risks undermining user autonomy by fostering deskilling, creating opacity, and introducing bias.

To counter these risks, ethical governance must be established from the outset. This involves applying six key principles - transparency, autonomy, privacy, fairness, beneficence, and accountability [4] - and choosing the right oversight model for the situation, whether it’s human-in-the-loop, human-on-the-loop, or human-in-command.

We’re already seeing practical examples of these principles in action. Tools like Personos show how AI can support human decision-making by focusing on privacy and transparency, enhancing rather than replacing human judgment.

The aim isn’t to reject AI coaching but to ensure it upholds both competency and authenticity - essential elements of human autonomy [5][13]. Features like failure mode indicators and built-in choice frictions allow users to stay in control, making informed decisions instead of blindly following automated outputs.

FAQs

How can I tell if an AI coach is manipulating me?

To spot potential manipulation by an AI coach, watch for responses that nudge your decisions or emotions toward goals the system has not disclosed. Ethical AI coaching should prioritize your independence and make clear how its recommendations are generated. If responses seem designed to win your trust excessively or to foster reliance, they may be weakening your ability to decide on your own. Staying alert helps you keep control over your choices.

What safeguards prevent over-reliance on AI coaching?

Safeguards against over-reliance on AI coaching keep human autonomy front and center. They include transparency about how the AI works, informed consent, and adherence to ethical guidelines, so that users understand the system and retain control over their decisions. Clear limits on the AI's role, consistent human oversight, and active encouragement of users' own judgment keep the AI a helpful assistant rather than a substitute, while respecting privacy and accountability.
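Consistent oversight can include a simple signal for possible over-reliance. The hypothetical `RelianceMonitor` below tracks whether a user accepted each of their most recent AI suggestions and flags a human check-in when the acceptance rate over a full window exceeds a threshold. The window size and the 95% threshold are illustrative assumptions. A high acceptance rate may simply mean the advice is good, so the flag is a prompt for review, not evidence of dependence.

```python
from collections import deque


class RelianceMonitor:
    """Rolling-window heuristic for possible over-reliance (hypothetical)."""

    def __init__(self, window: int = 20, max_acceptance: float = 0.95):
        self.window = window
        self.max_acceptance = max_acceptance
        # Holds True/False for the most recent `window` decisions only.
        self._accepted = deque(maxlen=window)

    def record(self, accepted: bool) -> None:
        """Log whether the user accepted the AI's suggestion unchanged."""
        self._accepted.append(accepted)

    def acceptance_rate(self) -> float:
        if not self._accepted:
            return 0.0
        return sum(self._accepted) / len(self._accepted)

    def needs_check_in(self) -> bool:
        # Judge only once the window is full, to avoid flagging new users.
        return (len(self._accepted) == self.window
                and self.acceptance_rate() > self.max_acceptance)


if __name__ == "__main__":
    monitor = RelianceMonitor(window=5, max_acceptance=0.8)
    for _ in range(5):
        monitor.record(True)
    print(monitor.acceptance_rate(), monitor.needs_check_in())
```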

When should a human override an AI coaching recommendation?

When using AI in coaching, there are moments when human judgment must take precedence. This is especially true when human autonomy, ethical considerations, or the accuracy of AI-generated advice come into question. If a recommendation clashes with personal values, fails to consider the specific context, or carries risks such as bias or potential harm, stepping in becomes essential.

Human oversight plays a crucial role in maintaining accountability. It ensures that decisions remain aligned with both individual needs and organizational principles, protecting autonomy and upholding ethical standards in the coaching process.